A new paper is added to the collection of reproducible documents:

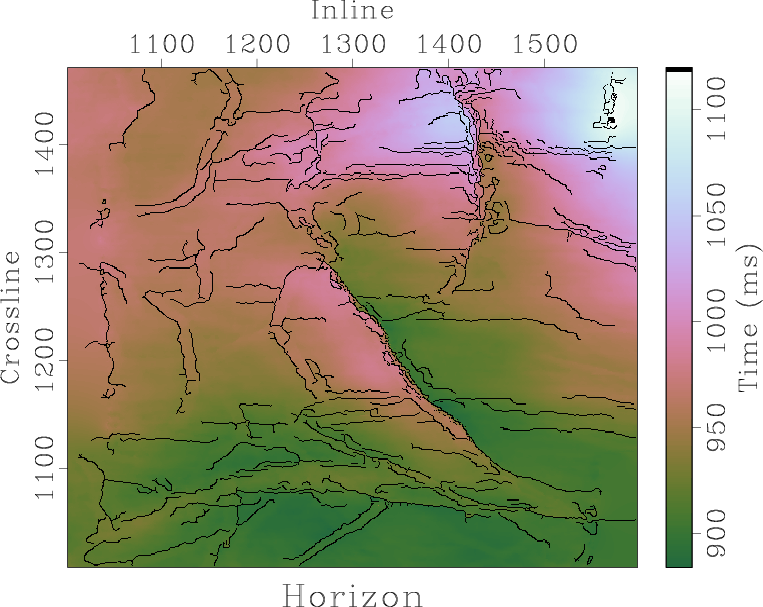

A robust approach to time-to-depth conversion and interval velocity estimation from time migration in the presence of lateral velocity variations

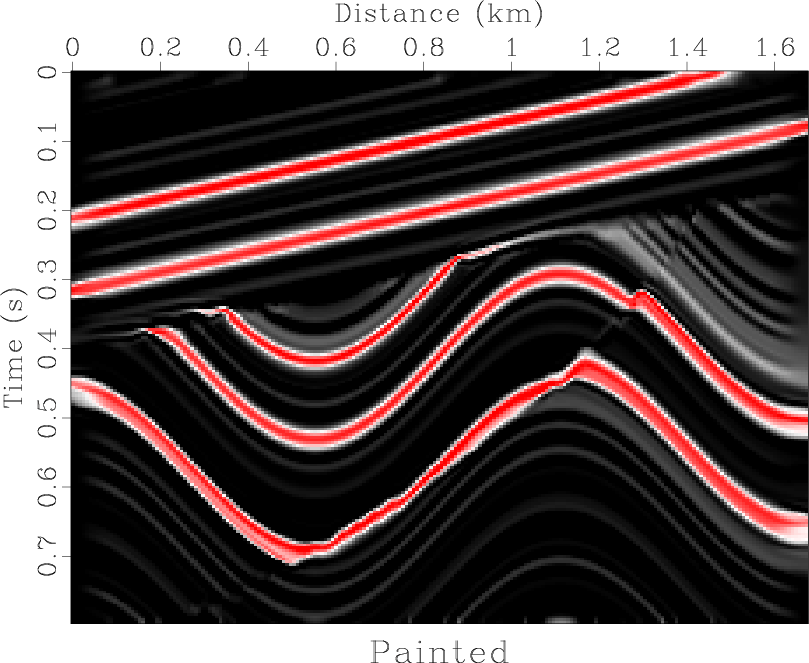

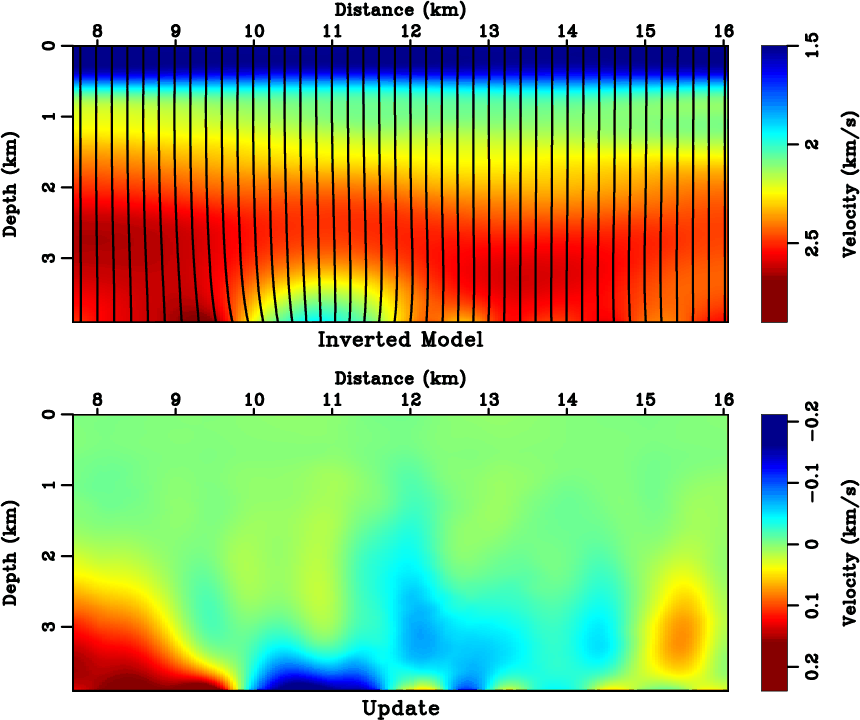

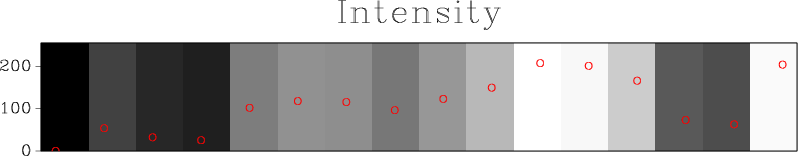

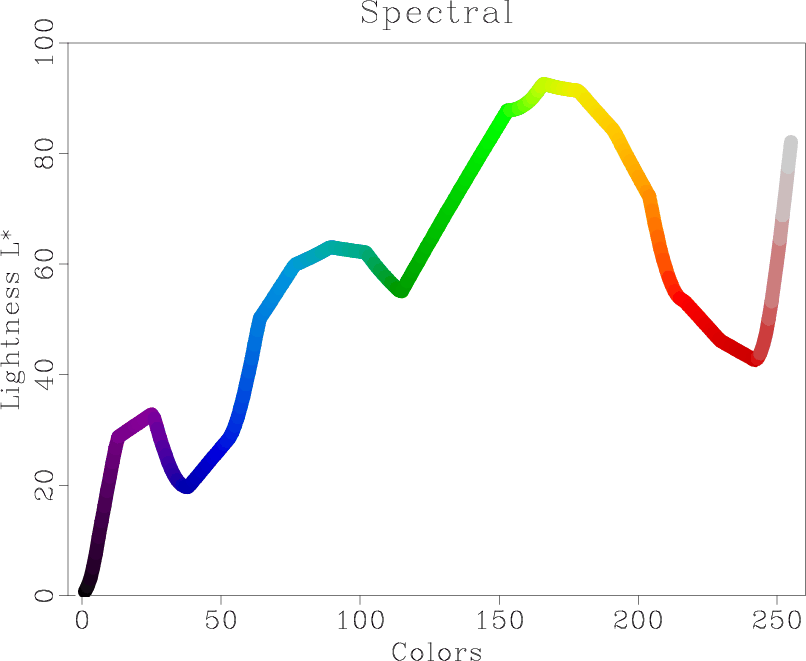

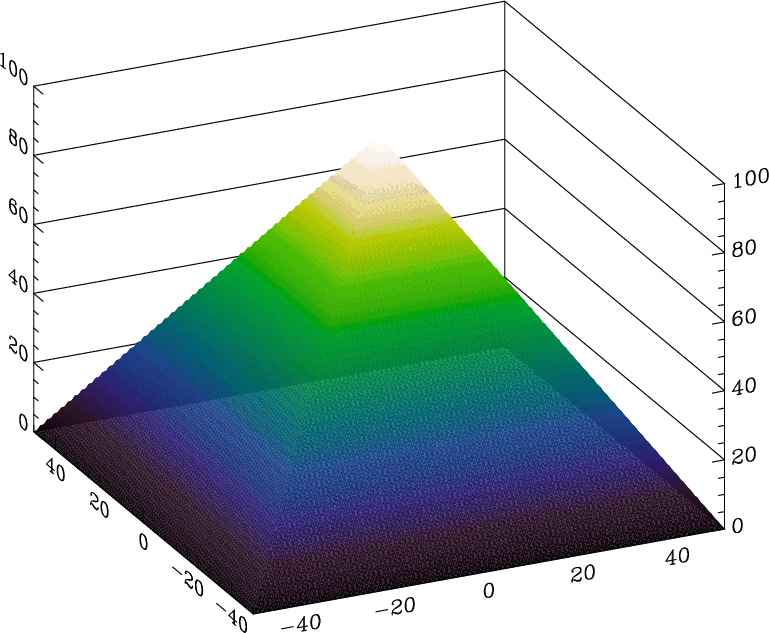

The problem of converting time-migration velocity to interval velocity in depth in the presence of lateral velocity variations can be reduced to solving a system of partial differential equations. In this paper, we formulate the problem as a nonlinear least-squares optimization for seismic interval velocity and seek its solution iteratively. The input for inversion is the Dix velocity, which also serves as an initial guess. The inversion gradually updates the interval velocity in order to account for lateral velocity variations that are neglected in the Dix inversion. The algorithm has a moderate cost thanks to regularization, which speeds up convergence while ensuring a smooth output. The proposed method should be more numerically robust than previous approaches, which amount to monotonic extrapolation in depth. For a successful time-to-depth conversion, image-ray caustics should be either nonexistent or excluded from the computational domain. The resulting velocity can be used in subsequent depth-imaging model building. Both synthetic and field data examples demonstrate the applicability of the proposed approach.
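For orientation, in a laterally homogeneous medium the Dix velocity used above as the starting model reduces to the classic 1D Dix formula, and time-to-depth conversion reduces to vertical integration of interval velocity over one-way time. The sketch below computes only that laterally homogeneous baseline. It assumes RMS velocities sampled at two-way times starting at the surface (t = 0). It does not implement the paper's nonlinear least-squares inversion for lateral velocity variations, and the function names are hypothetical.

```python
import math


def dix_interval_velocity(t, v_rms):
    """Classic discrete Dix formula (laterally homogeneous assumption).

    t     -- increasing two-way times in seconds, with t[0] = 0 at the surface
    v_rms -- RMS velocities in m/s sampled at those times
    Returns one interval velocity per time interval [t[i], t[i+1]].
    """
    v_int = []
    for i in range(len(t) - 1):
        dt = t[i + 1] - t[i]
        # v_int^2 = (t2 * v2^2 - t1 * v1^2) / (t2 - t1)
        num = t[i + 1] * v_rms[i + 1] ** 2 - t[i] * v_rms[i] ** 2
        if dt <= 0 or num <= 0:
            raise ValueError(f"Dix inversion is unstable in interval {i}")
        v_int.append(math.sqrt(num / dt))
    return v_int


def time_to_depth(t, v_int):
    """Vertical time-to-depth mapping: z = sum of v_int * dt / 2.

    The factor 1/2 converts two-way time to one-way time.
    Assumes depth 0 at t[0] = 0.
    """
    z = [0.0]
    for i, v in enumerate(v_int):
        z.append(z[-1] + 0.5 * v * (t[i + 1] - t[i]))
    return z
```

For a two-layer model with 2000 m/s down to 1 s and 3000 m/s between 1 s and 2 s, the RMS velocity at 2 s is sqrt(6.5e6) m/s. Feeding `t = [0.0, 1.0, 2.0]` and `v_rms = [2000.0, 2000.0, math.sqrt(6.5e6)]` into `dix_interval_velocity` should recover interval velocities of about 2000 and 3000 m/s. `time_to_depth` should then place the layer boundaries near 1000 m and 2500 m. The paper's method begins from this kind of Dix estimate and updates it iteratively to account for lateral variations that the formula ignores.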

Madagascar users are encouraged to try improving the results.