Since infinite numbers of monkeys hitting keys on typewritters for an infinite amount of time are not usually available for employment, researchers do not proceed at random, but come up instead with a hypothesis, followed by experiments. Ideas may be stimulated by close acquaintance with the subjects and tools of the experiment, but by the very nature of things theory proceeds in time before the experiment, for else one would not know how to design that experiment.

Many theories are sequences of logical statements that can be divided into a number of discrete falsifiable fragments. One option is to postpone experiments until the whole theory is complete, with proofs of convergence, existence, and whatnot. It may be reasonable to argue that until the whole theory is formally complete in all its majesty, one cannot properly perceive the significance of the fragment in context, and experiments need to be designed with the whole theory in mind.

However, a researcher that would proceed in the forementioned way would only discover that he is significantly less productive than his peers! What happened??

Well, his peers probably did something else. Namely they tried to test individual, even incomplete pieces of the theory. If the experiment does not work, they will know quite early, and find workarounds or just give up the dead end and try another hypothesis — until finally they hit on something that works. During all this time that the theory-minded fellow has been pouring equations for a single theory, without knowing whether the inevitable approximations will not doom its application to real data. On top of that, his fellows had more contact with real data and more inspiration for their other theories!

The strategy of his peers resembles quite closely the “release early, release often” saying of the open-source world. It resembles Agile development strategies, in contrast to the the Waterfall model.

The “implement early, implement often” approach also brings to mind a saying from a completely different field: the popular “Cut the losers early, let the winners run” advice to investors. Researchers are much like investors, just that they invest time instead of money. This analogy is not merely interesting: it is useful. A lot of statistics were done about expected returns for investors, which are described better by Pareto distributions/power laws (80/20 rule, etc) than by symmetric Gaussian pdfs, so culling “losers” when they reach a threshold but keeping “winners” biases the expectation towards winners. It is quite straightforward (but tedious) to map investor gains to number of references in peer-reviewed journals, count references and interview researchers about the number of hypotheses tried and discarded, and map the finance-domain portofolio statistical work to the research-domain. Venture capital firms large enough, who need to evaluate and invest in researchers, may have already done that.

What is necessary in order to “implement early, implement often”? Most important, software should be quite usable (being learnable helps as well; yes, these are different things). In the case of a development platform, like Madagascar, where users may freely adapt existing programs, code needs to be clean and easy to understand as well; for this kind of software, code readability and implementation descriptions are more than good practice for future maintenance, but productivity enhancers for current users as well! Madagascar, known for these attributes, is already up to a good start. Bottom line: usability multiplies productivity much more than comes to mind at first thought (i.e. time saved in not looking up things), since hard-to-use software decreases the probability of the researcher putting an idea to the test at all, and instead results in him postponing the moment of coding, without realising the aggregate productivity loss!

In conclusion: let us get to know Madagascar well, implement early, and implement often!

The place of numerical experiments in the research workflow

May 28, 2007 Systems No comments

Assembling reports from papers

April 8, 2007 Documentation No comments

New Python/SCons functions defined in rsf/book.py together with LaTeX georeport class and LaTeX2HTML customization scripts defined in SEGTeX can now assemble reports from individual papers. See, for example, incomplete Madagascar documentation collected in a single PDF file or a web page. The script for generating these documents is book/rsf/SConstruct. Other examples are referenced on the redesigned wiki page for Reproducible Documents.

To try this feature:

1. Make sure you have the figures properly locked.

2.

and run

then

or

to generate a PDF file and read it or

to generate a web page installed in $RSFDOC.

You can also generate all books at once by running

in $RSFSRC/book. Any feedback is appreciated.

RSF School in Austin

April 2, 2007 Celebration No comments

Check out the preliminary program for the RSF School in Austin on Friday, April 20, 2007. Attendance is free and open. To register, please send a free form e-mail to rsfschool@gmail.com.

The low-hanging fruit in the forests of Madagascar

March 26, 2007 Celebration No comments

The predictive power of modern science that led to today’s technologies is not a lucky accident. The scientific method is based on a set of rigurously defined principles. One of them is that experiments have to be described in sufficient detail to be reproducible by other researchers within statistically justified errorbars.

Let numerically-intensive sciences be defined as those brances of science and engineering in which the experiment is conducted entirely inside a computer. These sciences benefitted directly from the extraordinary increase in computing capacity of the last few decades. Every “lifting” of the “ceiling” of hardware constraints has brought with it new low-hanging fruit, and the corresponding rush to pick it. With it came disorganization. In the gold rushes of yore, disorganization did not matter too much as long as gold was found by the bucket, but scientific principles and industrial methods took over as soon as there was not much left to fight over. The scientific method and engineering practice are invoked by necessity whenever the going gets tough.

Likewise is the case of reproducibility in numerically-intensive sciences. The other thing that exploded together with hardware capacity was complexity of implementation, which became impossible to describe on the necessarily limited number of pages of a paper printed on… paper. I will use seismic processing and imaging as a concrete example from now on. Whoever assumes that most numerical experiments published in peer-reviewed papers are reproducible, should try their hand at reproducing exactly the figures and numerical results from some of these papers. What? No access to the input data? No knowledge of the exact parameters? Not even pseudocode for the main algorithm, just a few equations and two paragraphs of text? Have to take the researcher’s word that the implementation of a competing method for comparison is state-of-the art? Ouch. We get to miss the articles from the 1970’s, when the whole Fortran program fit on two pages. But who cares, as oil is being found?

I read with interest the section dedicated to wide/multi azimuth surveys in a recent number of First Break. Such surveys are at the leading edge today, but, inevitably, they will become commoditized in a few years. It is worth noting that the vast majority of the theory they are based on has been mature since the mid-80’s. Indeed, such surveys are just providing properly sampled data for the respective algorithms. What next, though, after this direction gets exploited? What is left? Going after amplitudes? Joint inversions? Anisotropy? Absorbtion? Mathematically more and more complex imaging methods? 9-C? 10-C? The objective soul with no particular technology to sell cannot help seeing diminishing returns, increasing complexity, and the only way out being through more data.

The “more data” avenue will indeed work well, and finally fulfil some long-standing theoretical predictions which did not work out for lack of sampling, but guess what: after we’ll get to full-azimuth over-under Q system surveys, with receivers in the well/water column and on the ocean botom too, the “more data” avenue for improvement will shut. That may happen even sooner, should oil prices or exploration resource constraints make it uneconomical. Then, in order to take our small steps forward, we will need to start getting pedantic about the small details such as the scientific method and reproducibility. And it is then that we will discover that the next low-hanging fruit is actually reproducibility.

No matter how big today’s gap between having just a paper and having its working implementation, this gap is actually easy to fill. That is because the filling has existed and was thrown away. The scripts, data and parameter files needed to create the figures have existed, and the methodical filing of them incurs only a small marginal time cost. All is needed is to chain those scripts together to perform automatically. This means that, for the first time, it is possible to give to other researchers not only the description of the experiment, but the experiment itself. Really. As if a physicist could clone his actual lab, complete with a copy of himself that can redo the experiment. Imagine the productivity boost that this would provide to physicists! Such boosts are actually available to geophysicists. Experiments that were once at the frontiers of science will be commoditized, and the effort moved to where more value is added. The frontiers will be pushed forward.

What is needed for a reproducible numerical experiment? To start with, publicly available datasets. Thankfully, those already exist. The next step is having reference implementations of geophysical algorithms, that anybody can take and implement their additions on, and use for comparisons. In other words, any package released under an open-source license as defined by the OSI. The software should foster a community that will balance its interests so that all advance. To illustrate with a well-known debate (now winding down), Kirchhoff proponents would want others to have a good quality Kirchhoff implementation as a comparison to their Wavefield-Extrapolation algorithms, and the WE people would do the same for their own methods, and in the end all reference implementations will be very good. The network effect will enhance the usefulness, completness and quality of the software: the more participants, the more and better algorithms and documentation. The tragedy of commons in reverse. For more reasons on why particular people and institutions in our field would adopt open source, see Why Madagascar.

I believe Madagascar does have the incipient qualities for becoming the Reference Implementation of Geophysical Algorithms. Should we have such high ambitions? Should we adopt this as a easy-to-remember motto? Madagascar: the Reference Implementation of Geophysical Algorithms. I think it sounds good, and something like that in big bold letters is needed on the main page, above the tame and verbose mission statement. Maybe some day Madagascar will fulfill the motto and grow enough to become institutionalized, i.e. become a SEG Foundation (SF) or something 🙂

This was fun – but I want to rehash the main idea of this rant: in a few years algorithm details will start to matter, people will need reproducibility to get ahead, and Madagascar is ideally positioned to take advantage of this!

Are the times good, or what?

madagascar-0.9.4

March 15, 2007 Celebration 3 comments

New stable version is released. The previous stable version was downloaded from SourceForge 688 times. It is more difficult to keep track of Subversion accesses.

Version 0.9.4 features simplified and improved installation in addition to numerous new modules and examples. It has been tested, so far, on

- RedHat Linux

- Ubuntu Linux

- OpenSuSE Linux

- Mandriva Linux

- Solaris

- FreeBSD

- Cygwin on Windows NT

- SFU on Windows NT

F-K AMO

March 13, 2007 Documentation No comments

Another old paper has been added to the collection of reproducible papers:

Imaging overturning reflections

March 12, 2007 Documentation No comments

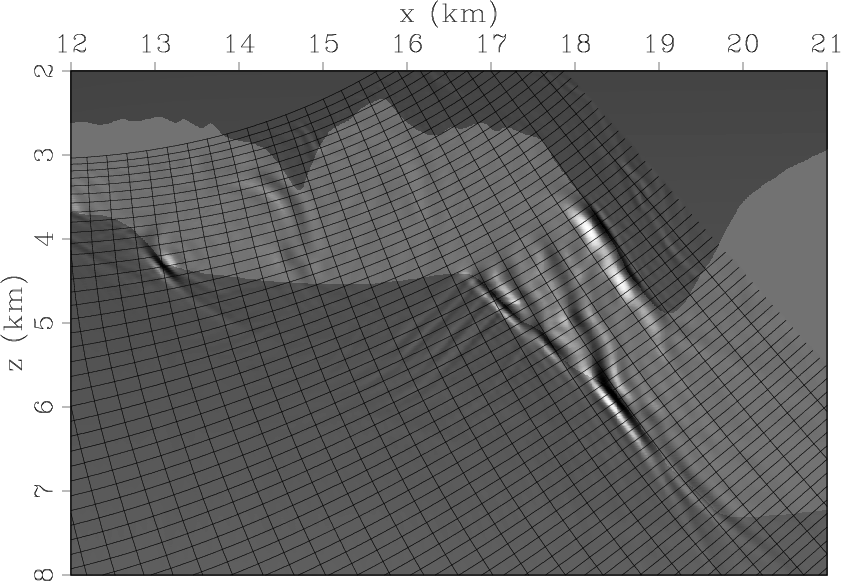

Paul Sava contributes another not so old paper to the collection of reproducible papers:

Madagascar programs guide: sfscale

March 12, 2007 Documentation No comments

A short section on sfscale has been added to the Madagascar programs guide.

Thanks to Nick Vlad for filling other missing entries in the guide!

Reproducibility page on the Wiki

March 12, 2007 Documentation No comments

Whereas introductions to reproducibility are provided in passing on various pages that described how to do it, there is no single central point describing what reproducibility is. You know, a little page to introduce someone to the concept, without reference to any particular implementation. Something to link from the “reproducibility” word when it appears on the Wiki. A “reproducibility business card”.

Also, I felt a need to share with others the links to other people’s reproducibility implementations/ideas I have gathered lately. I considered contributing to the Reproducibility page on Wikipedia, but reproducibility in computational science is a bit different from reproducibility in general, in that it is possible not only to describe the experiment, but to actually provide its full implementation, ready to be run at any time!

Hence, a dedicated Madagascar Wiki page on reproducibility! Right now it lives in the Sandbox. Please feel free to edit it and add to it. I will continue to do so myself as well, but for now I was hoping other people have more raw material (i.e. links to other reproducibility implementations, or good articles describing the concept).

Time-shift imaging condition

March 11, 2007 Documentation No comments

Another not so old paper has been added to the collection of reproducible papers: