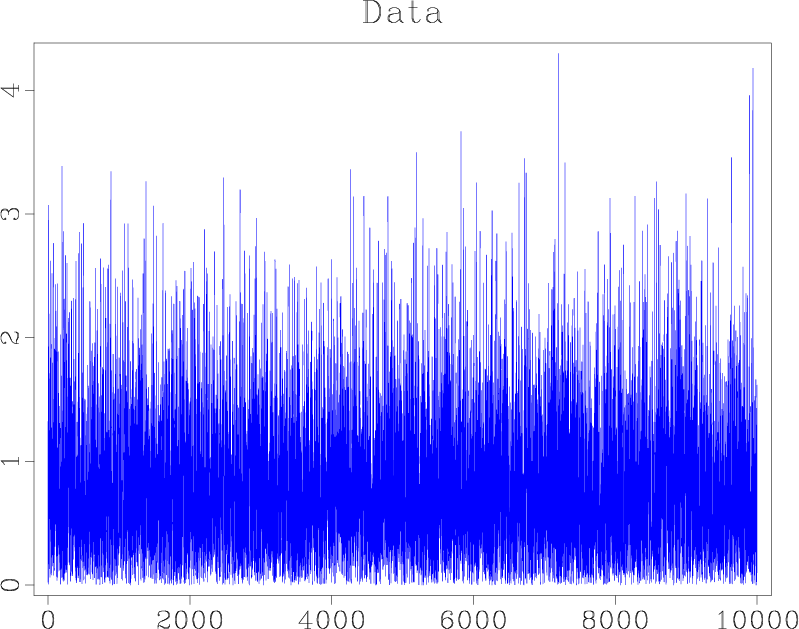

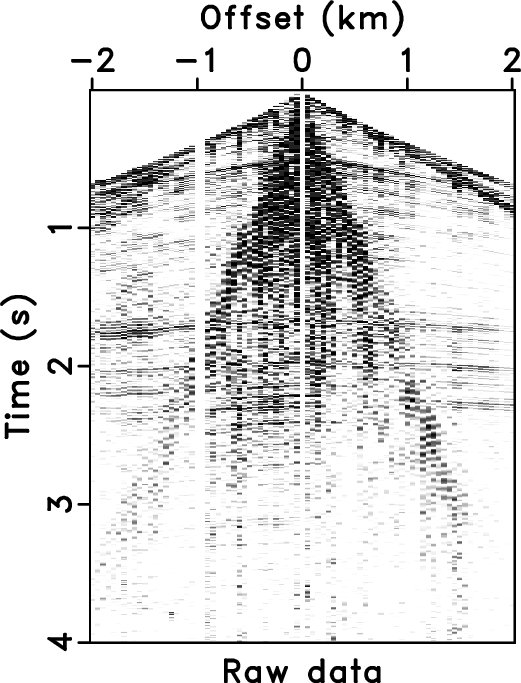

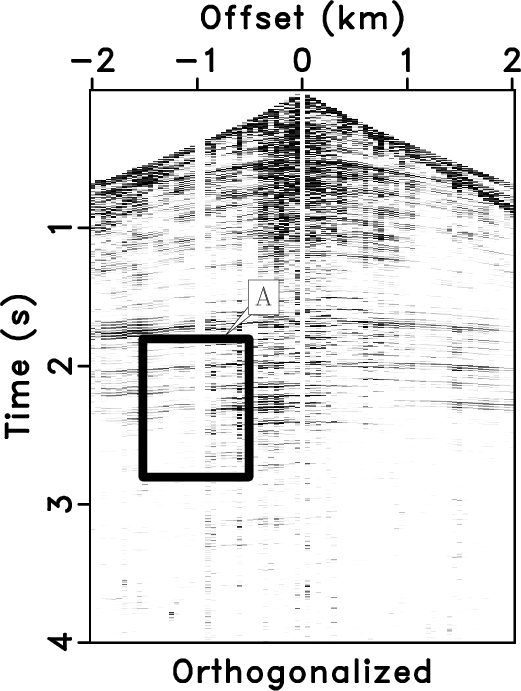

Joe Dellinger, the author of Vplot, suggests adjusting the pixc and greyc parameters when including Vplot raster figures in Word documents. He writes:

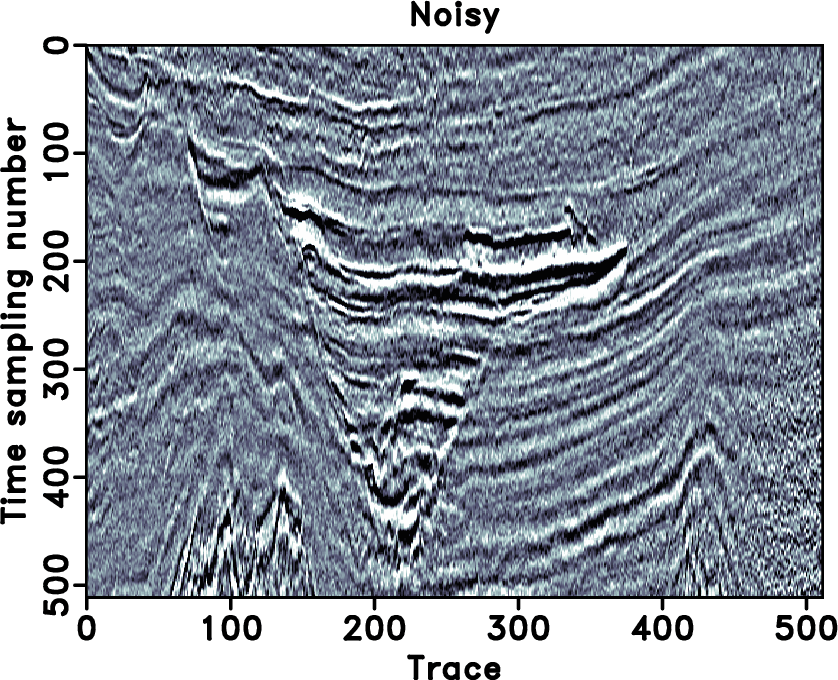

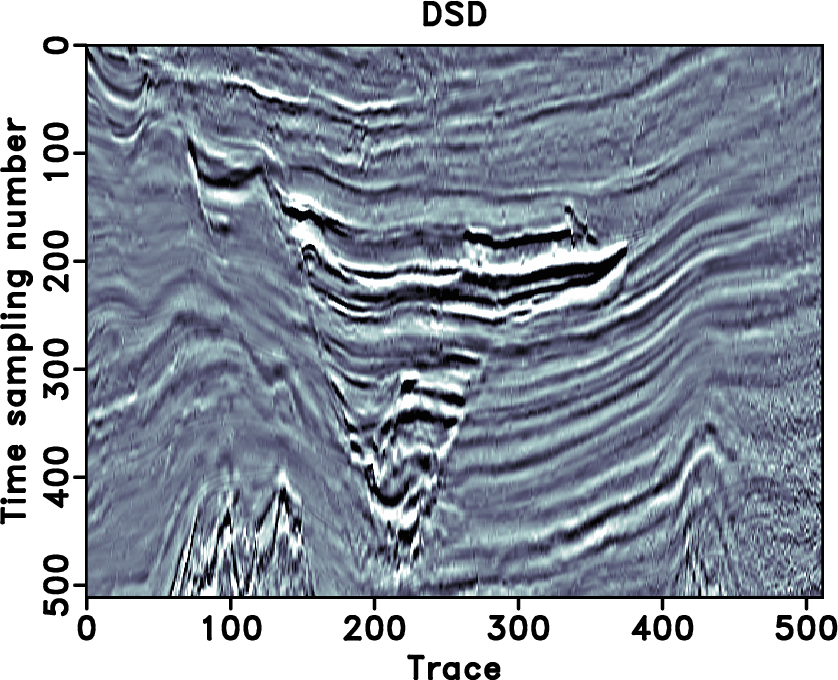

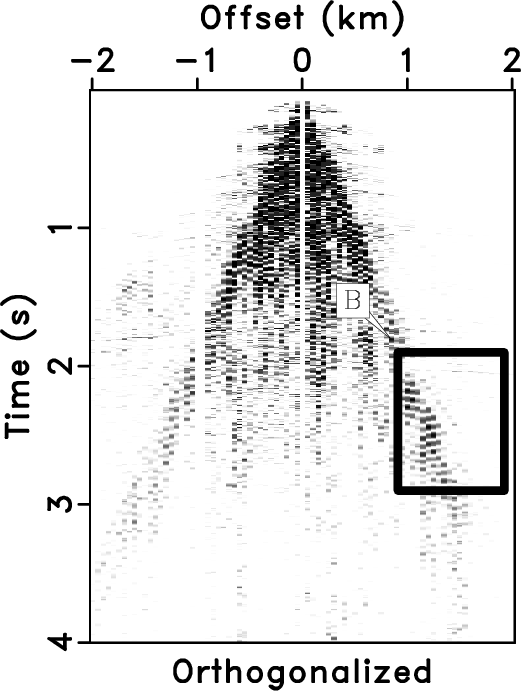

> Wow, working on my SEG abstract I had a helluva time getting my vplot raster figures to look decent in word. Then I realized… wait a minute, it’s doing just the bad things plotters back in the 80’s were doing. I fiddled a little with pixc and greyc, and voila! Beautiful raster figures.

From the Vplot documentation:

pixc is used only when dithering is being performed, and also should only be used for hardcopy devices. It alters the grey scale to correct for pixel overlap on the device, which (if uncorrected) causes grey raster images to come out much darker on paper than on graphics displays.

greyc is used only when dithering is being performed, and really should only be used for hardcopy devices. It alters the grey scale so that grey rasters come out on paper with the same nonlinear appearance that is perceived on display devices.

The default values are pixc=1 and greyc=1. For his Word document, Joe used pixc=1.15 and greyc=1.25.
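As a rough illustration of how these values might be passed along, the following Python sketch assembles a command line for a Vplot pen filter. It assumes a pen program named `pspen` that accepts `name=value` parameters, reads Vplot from standard input, and writes to standard output. The helper `pen_command` and the file names are hypothetical, and the sketch only builds and prints the command; it does not run it.

```python
import shlex


def pen_command(pen="pspen", pixc=1.0, greyc=1.0):
    """Build the argument list for a Vplot pen filter.

    Assumption: the pen accepts pixc and greyc as name=value pairs.
    The default pen name "pspen" is also an assumption; substitute
    whichever pen filter is used on your system.
    """
    return [pen, f"pixc={pixc:g}", f"greyc={greyc:g}"]


# The values Joe reports using for his Word document.
cmd = pen_command(pixc=1.15, greyc=1.25)

# figure.vpl and figure.ps are placeholder file names.
print(shlex.join(cmd) + " < figure.vpl > figure.ps")
```

Because both parameters apply only when dithering is being performed, they may have no visible effect unless the pen is actually dithering the raster.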

To convert Vplot plots to other forms of graphics, you can use vpconvert.

See also: