An old paper has been added to the collection of reproducible documents: Multichannel adaptive deconvolution based on streaming prediction-error filter

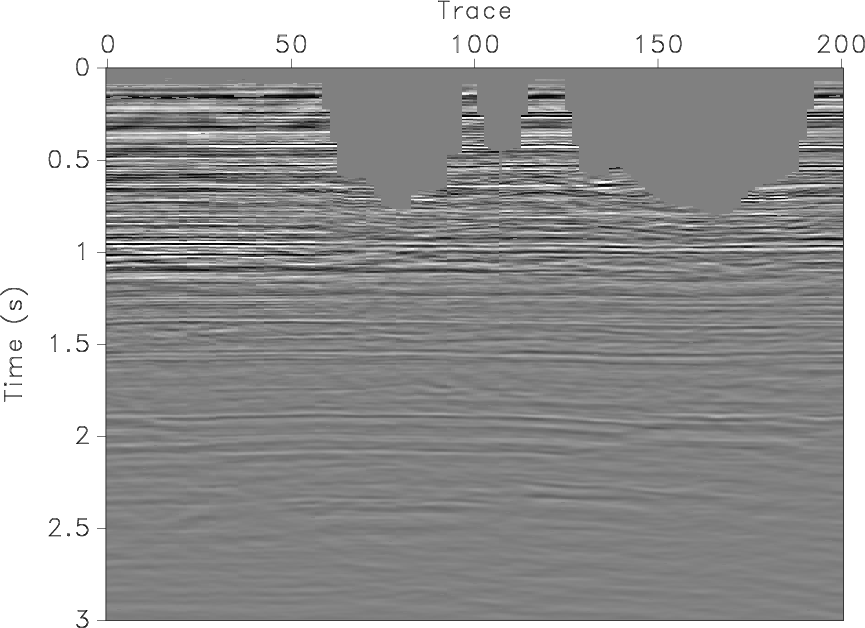

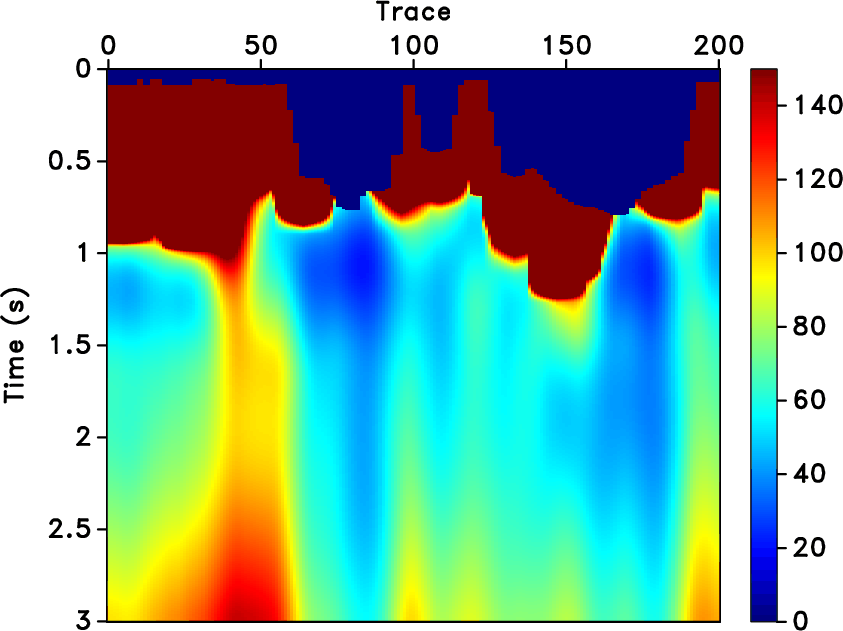

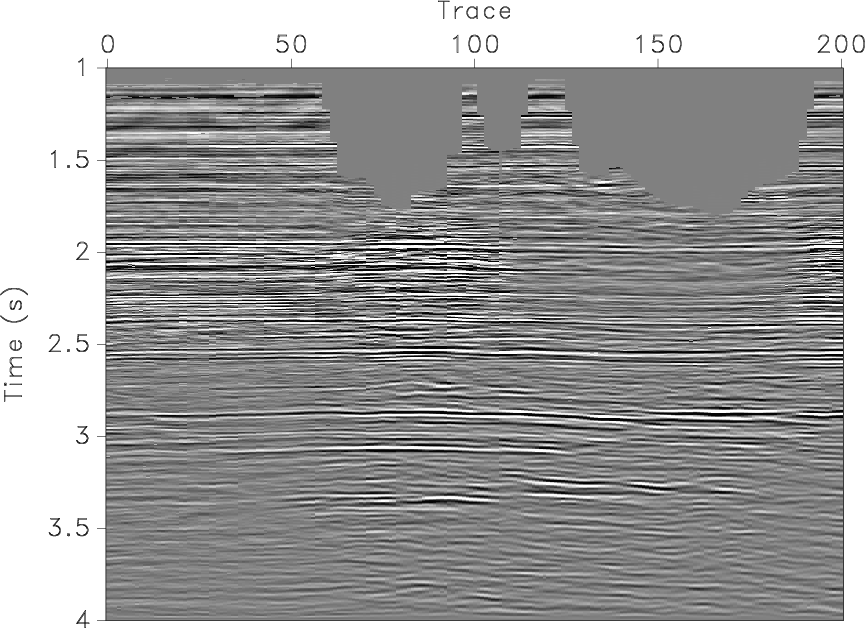

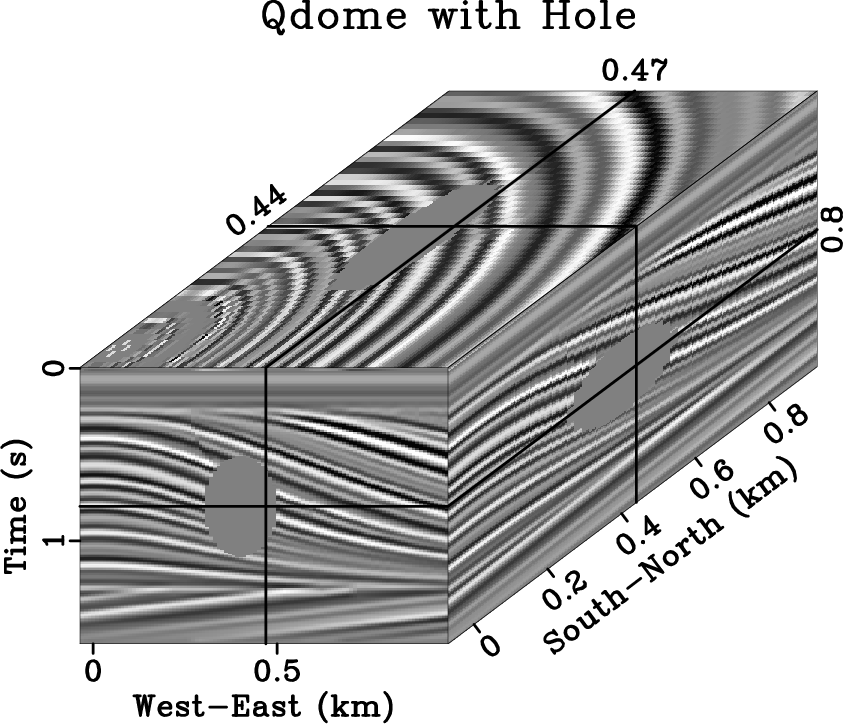

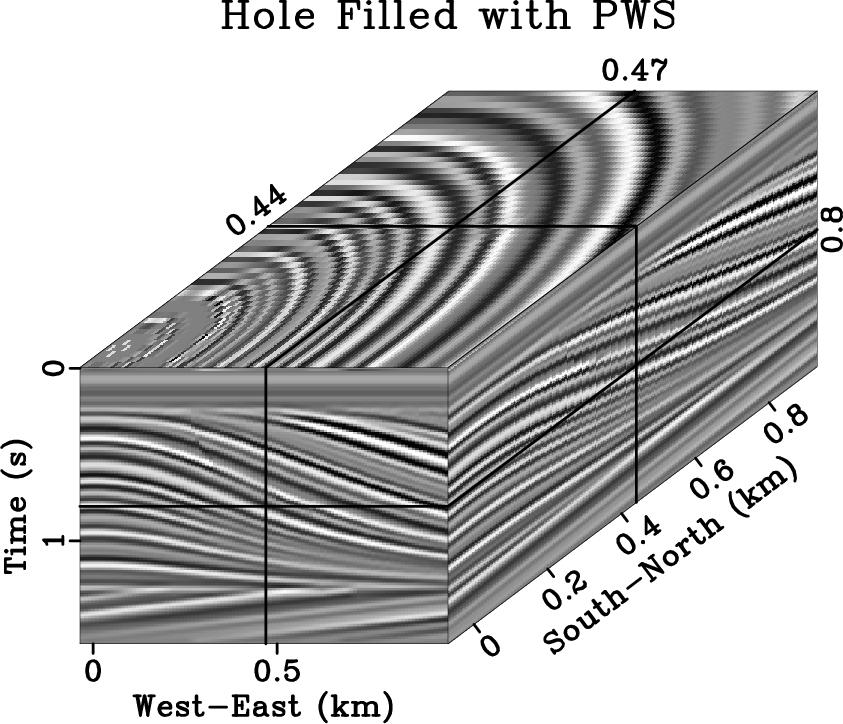

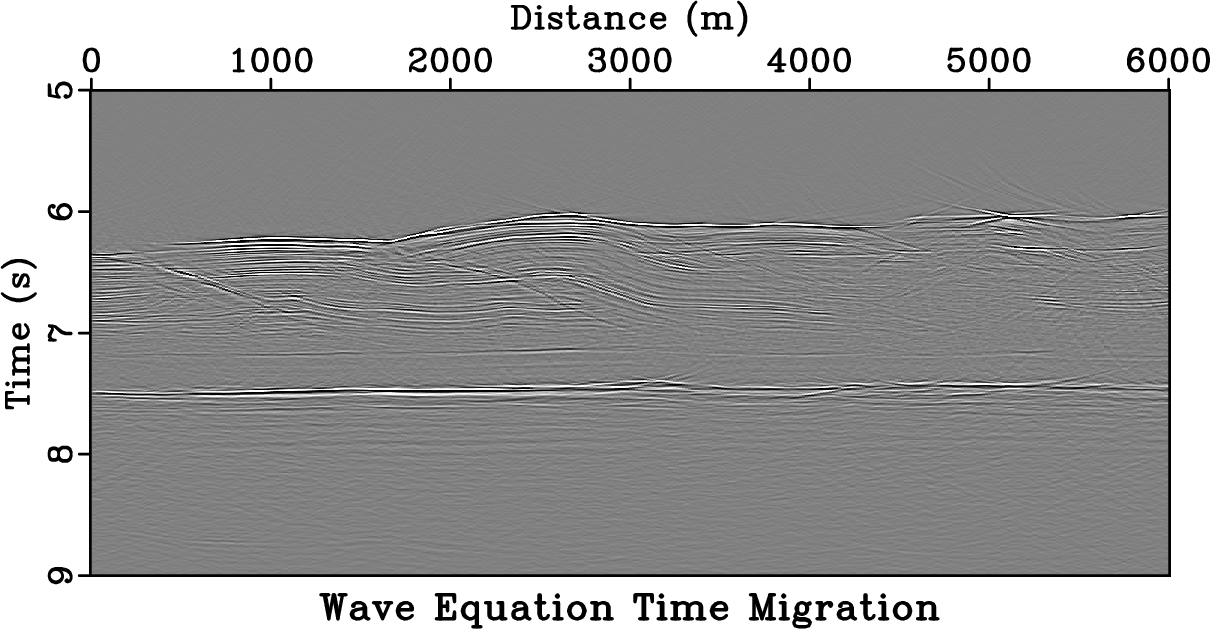

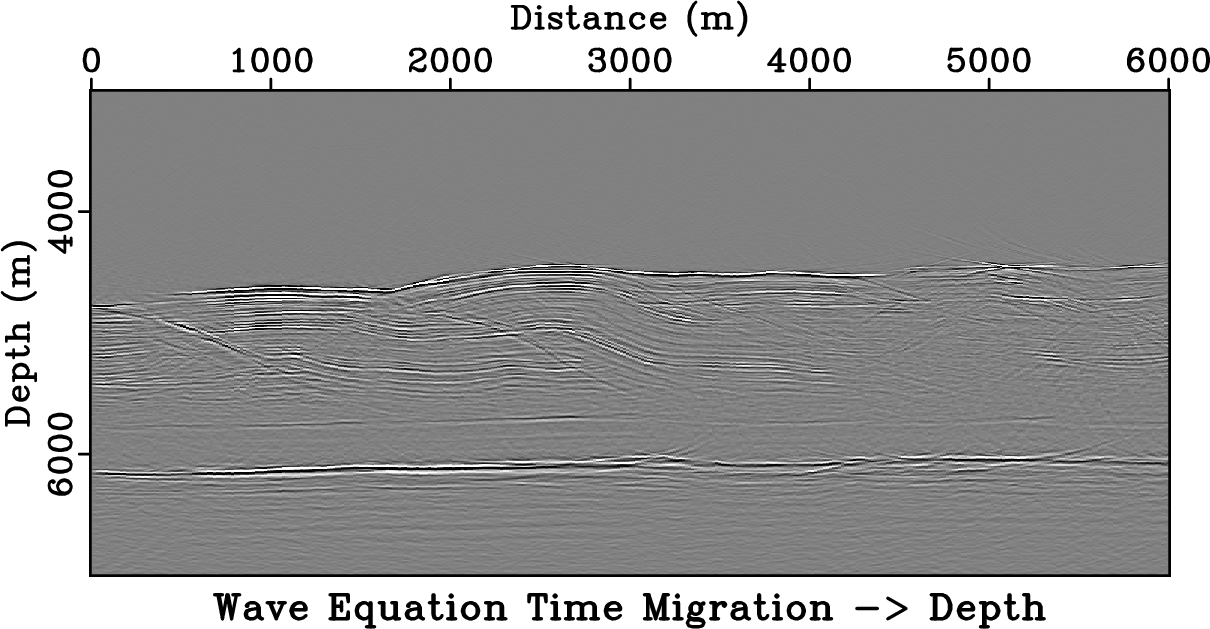

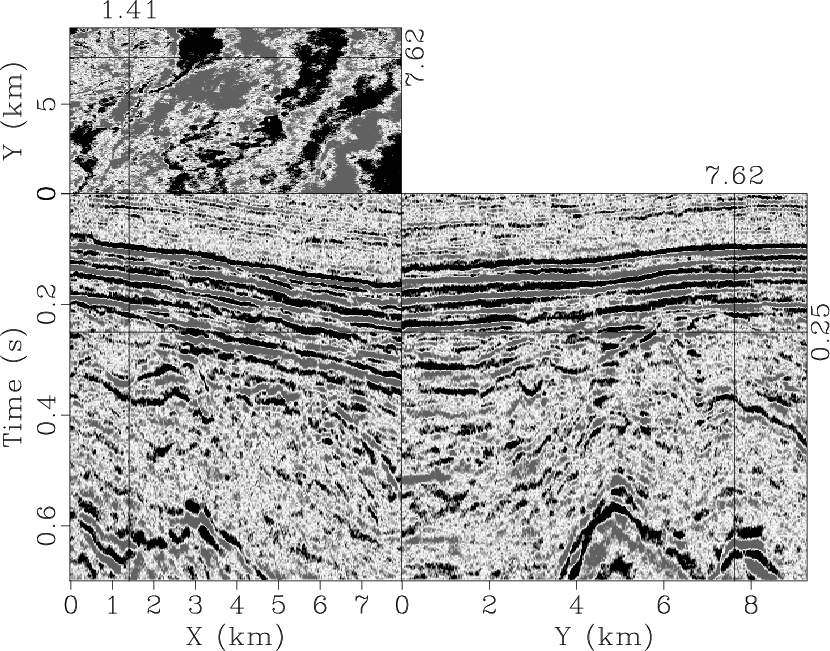

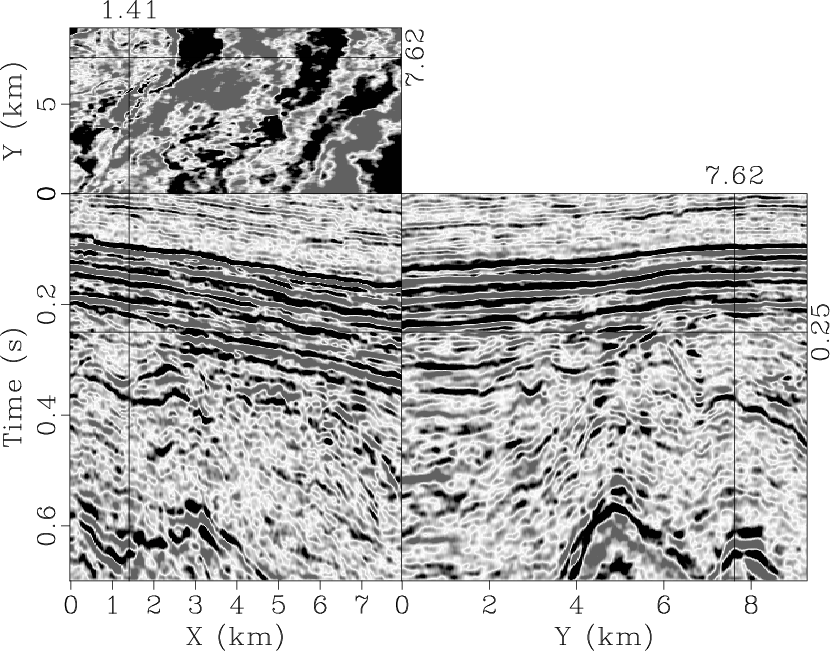

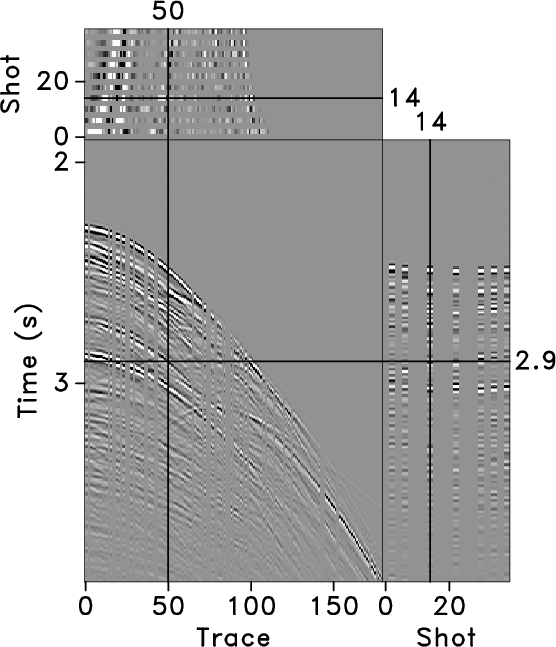

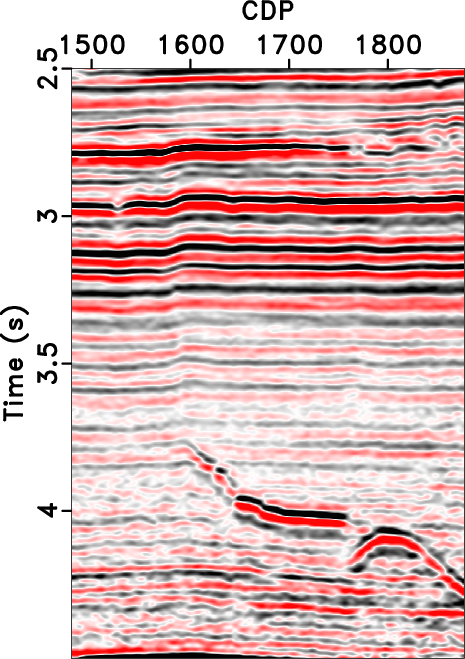

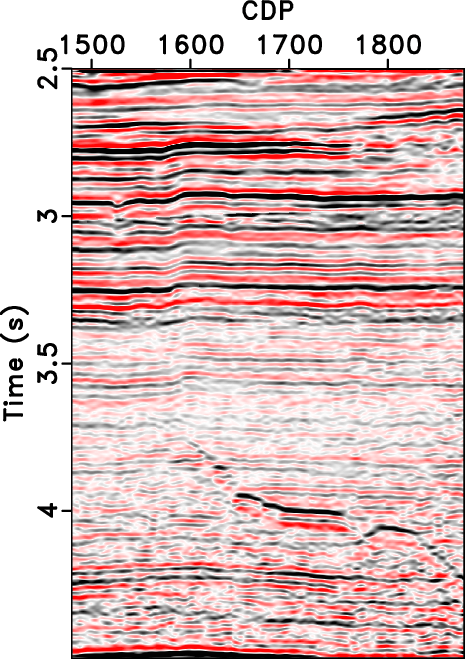

Deconvolution improves the resolution of seismic data mainly by compressing seismic wavelets, which makes it important for high-resolution seismic processing. Prediction-error filtering and least-squares inverse filtering are widely used in seismic deconvolution and usually assume that seismic data are stationary. Because of factors such as earth filtering, actual seismic wavelets vary in time and space. Adaptive prediction-error filters can effectively characterize the nonstationarity of seismic data, but they rely on iterative methods, which leads to slow computation and high memory cost for large-scale data. We propose an adaptive deconvolution method based on a streaming prediction-error filter. Instead of using slow iterations, we solve underdetermined problems with new local smoothness constraints analytically to predict time-varying seismic wavelets. To avoid discontinuities in the deconvolution results along the space axis, we apply both time and space constraints, which yields a multichannel adaptive deconvolution. We also define a time-varying prediction step that preserves the relative amplitude relationship among different reflections. The new deconvolution improves resolution along the time direction while reducing computational cost through streaming computation, which makes it suitable for nonstationary large-scale data. Tests on synthetic models and field data show that the proposed method effectively improves the resolution of nonstationary seismic data while maintaining the lateral continuity of seismic events. Furthermore, the relative amplitude relationship among different reflections is reasonably preserved.
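To illustrate why no iterations are needed, here is a minimal sketch, not the authors' code. It assumes that the time and space smoothness constraints can be written as two quadratic penalties that tie the filter at each sample to the filter at the previous time sample ($\mathbf{a}_{t-1}$) and to the filter at the same time on the previous trace ($\mathbf{a}_{x-1}$). Minimizing $(d_t - \mathbf{a}^T\mathbf{y}_t)^2 + \lambda_t^2\|\mathbf{a}-\mathbf{a}_{t-1}\|^2 + \lambda_x^2\|\mathbf{a}-\mathbf{a}_{x-1}\|^2$, where $\mathbf{y}_t$ holds past samples, gives the closed form $\mathbf{a} = \bar{\mathbf{a}} + \mathbf{y}_t\,(d_t - \bar{\mathbf{a}}^T\mathbf{y}_t)/(\lambda^2 + \mathbf{y}_t^T\mathbf{y}_t)$ via the Sherman-Morrison formula, with $\lambda^2 = \lambda_t^2+\lambda_x^2$ and $\bar{\mathbf{a}} = (\lambda_t^2\mathbf{a}_{t-1} + \lambda_x^2\mathbf{a}_{x-1})/\lambda^2$. The function name and the parameters `nf`, `gap`, `lam_t`, and `lam_x` are hypothetical. The prediction step is held constant, so the paper's time-varying prediction step is not modelled.

```python
import numpy as np


def streaming_pef_decon(data, nf=10, gap=1, lam_t=1.0, lam_x=1.0):
    """Hypothetical sketch of multichannel streaming prediction-error deconvolution.

    data  : 2-D array (nt, nx), one trace per column.
    nf    : number of prediction coefficients (assumed parameter).
    gap   : constant prediction step in samples (assumption; the paper
            uses a time-varying step, which is not modelled here).
    lam_t, lam_x : positive weights tying each filter to its neighbour in
            time and in space (hypothetical names).
    Returns the prediction-error (deconvolved) panel.
    """
    nt, nx = data.shape
    out = np.zeros((nt, nx))
    lt2, lx2 = lam_t ** 2, lam_x ** 2
    prev_trace = np.zeros((nt, nf))  # filters of trace x-1, one per time sample
    for ix in range(nx):
        d = data[:, ix].astype(float)
        cur_trace = np.zeros((nt, nf))
        a_t = np.zeros(nf)  # filter at the previous time sample
        for it in range(nt):
            # Regressor of past samples d[it-gap], d[it-gap-1], ... (zero-padded).
            y = np.zeros(nf)
            for k in range(nf):
                j = it - gap - k
                if j >= 0:
                    y[k] = d[j]
            # Reference filter: time neighbour only on the first trace,
            # otherwise a weighted blend of time and space neighbours.
            if ix == 0:
                a_ref, lam2 = a_t, lt2
            else:
                lam2 = lt2 + lx2
                a_ref = (lt2 * a_t + lx2 * prev_trace[it]) / lam2
            # Closed-form rank-one update (Sherman-Morrison), no iterations.
            err = d[it] - a_ref @ y
            a = a_ref + y * err / (lam2 + y @ y)
            out[it, ix] = d[it] - a @ y  # prediction error = deconvolved sample
            cur_trace[it] = a
            a_t = a
        prev_trace = cur_trace
    return out
```

The data are processed in a single pass over the samples, and each sample costs O(`nf`) operations. The only state kept is the current filter and the previous trace's filters, which is what makes a streaming approach cheap compared with iterative adaptive filtering. For example, on a panel of identical sinusoidal traces, `streaming_pef_decon(panel, nf=2, gap=1, lam_t=0.1, lam_x=0.1)` quickly learns the two-term recurrence, and the prediction error becomes much smaller than the input.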