Another old paper has been added to the collection of reproducible documents:

Test case for PEF estimation with sparse data II

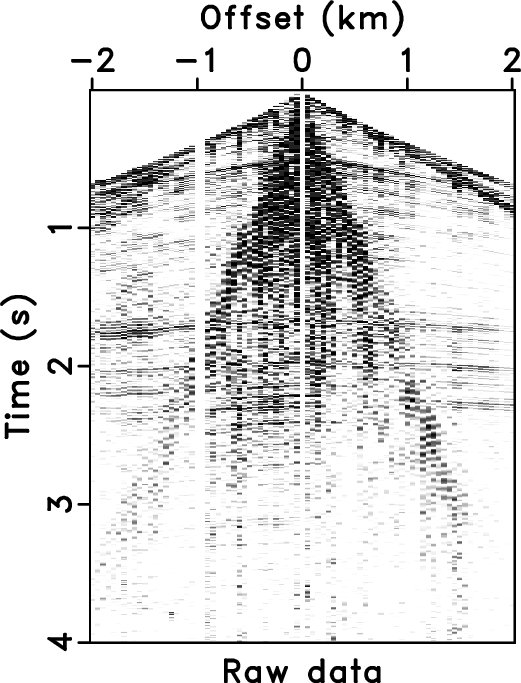

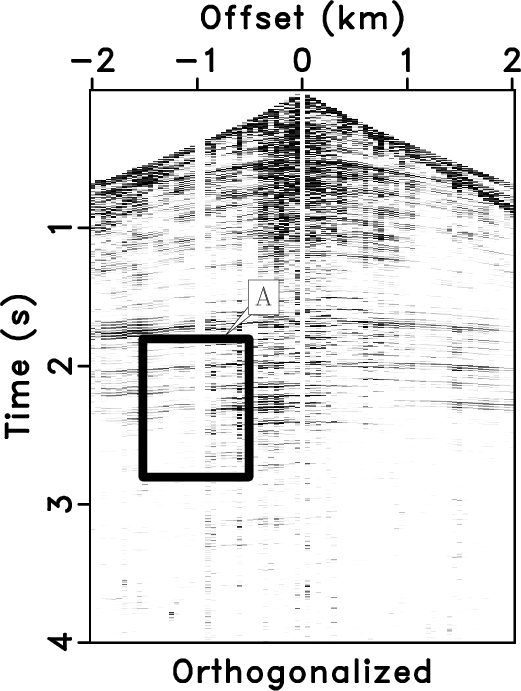

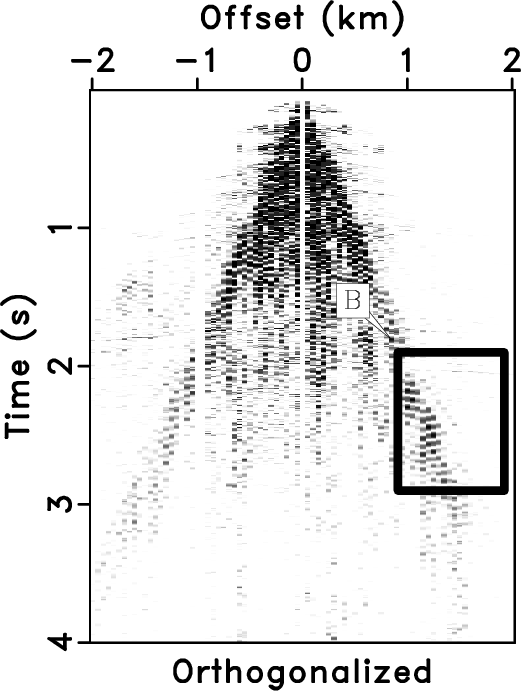

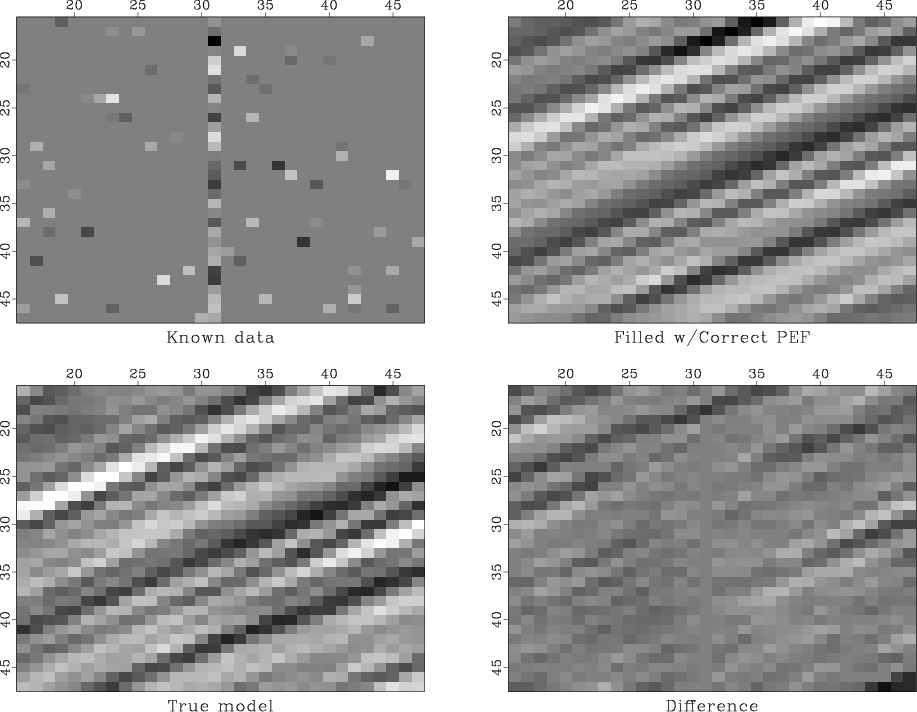

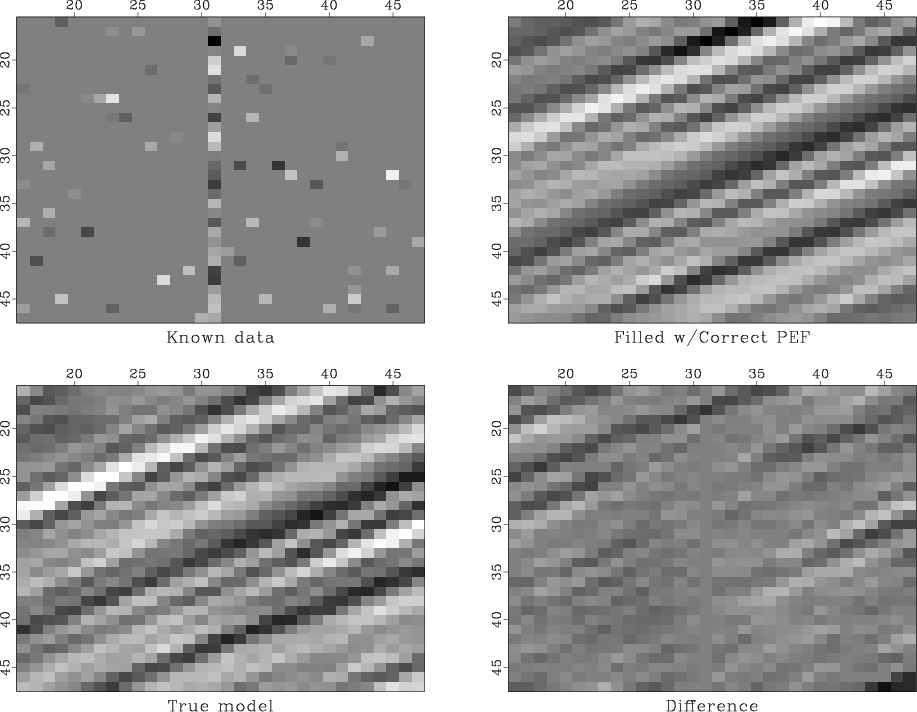

The two-stage missing-data interpolation approach of Claerbout (1998) (henceforth, the GEE approach) has been applied with great success in the past (Fomel et al., 1997; Clapp et al., 1998; Crawley, 2000). The main strength of the approach lies in the ability of the prediction-error filter (PEF) to find multiple hidden correlations in the known data and then, via regularization, to impose the same correlation (covariance) on the unknown model. Unfortunately, the GEE approach may break down when the data are very sparsely distributed, because the number of valid regression equations in the PEF estimation step may drop to zero. In this case, the most common recourse is simply to retreat to regularizing with an isotropic differential filter (e.g., the Laplacian), which leads to a minimum-energy solution and implicitly assumes an isotropic model covariance.
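To make the failure mode concrete, the sketch below counts how many PEF regression equations survive a given sampling. It is a minimal NumPy illustration under our own assumptions, not the SEP implementation: the function name `count_pef_equations` is hypothetical, and an equation is treated as usable only if every sample under a full `a1`-by-`a2` rectangular filter footprint is known. A real PEF footprint is a subset of that rectangle, so this count is a lower bound.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def count_pef_equations(known, a1, a2):
    """Count regression equations whose whole a1-by-a2 footprint is known.

    known: 2-D boolean mask, True where the data sample is recorded.
    Assumption: a full rectangular footprint (a superset of the true PEF
    footprint), so the result is a lower bound on the usable equations.
    """
    # All a1-by-a2 windows; shape (n1 - a1 + 1, n2 - a2 + 1, a1, a2).
    windows = sliding_window_view(known, (a1, a2))
    # An equation is usable only if every input under the filter is known.
    return int(windows.all(axis=(2, 3)).sum())
```

For a random mask in which each sample is known independently with probability f, the expected fraction of usable equations is f^(a1*a2); for a 5-by-3 footprint and f = 0.1 that is 10^-15, so on any realistic grid no equations remain.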

A pressing goal of many Stanford Exploration Project (SEP) researchers is to find a way of estimating a PEF from sparse data. Although new ideas are certainly required to solve this interesting problem, Claerbout (2000) proposes that a standard, simple test case be constructed first, and suggests using a known model with vanishing Gaussian curvature. In this paper, we present the following simpler test case, which we feel makes a better first step.

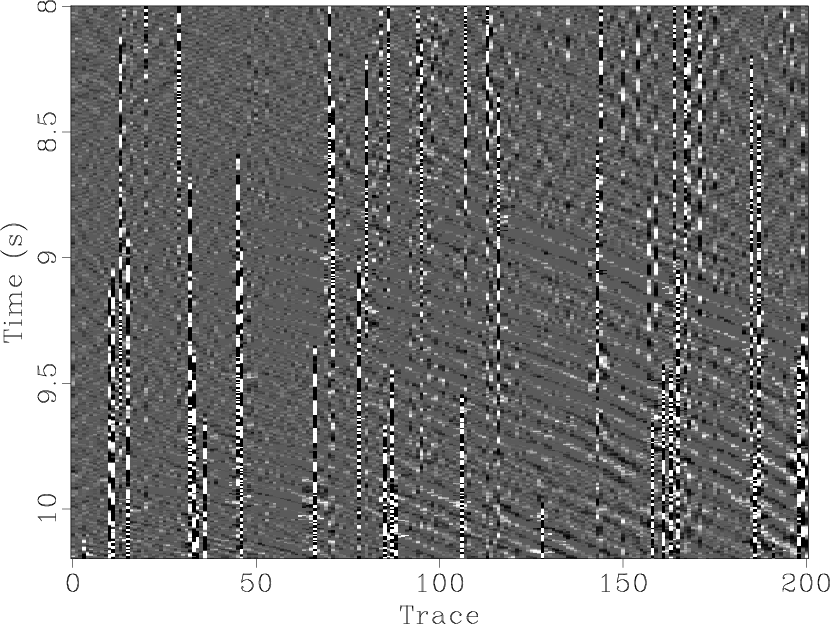

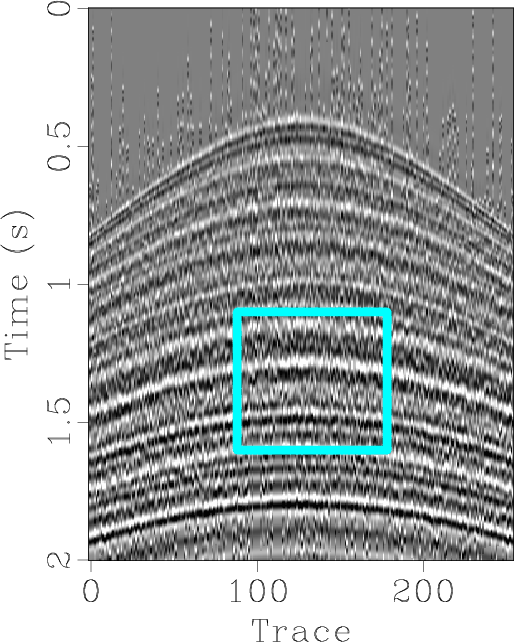

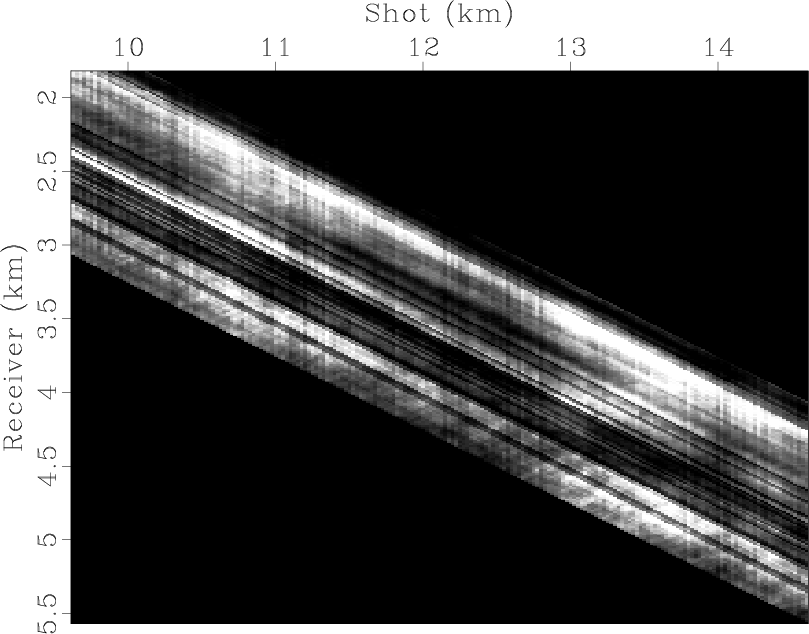

- Model: Deconvolve a 2-D field of random numbers with a simple dip filter, leading to a “plane-wave” model.

- Filter: The ideal interpolation filter is simply the dip filter used to create the model.

- Data: Subsample the known model randomly, and so sparsely that conventional PEF estimation becomes impossible.
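A minimal NumPy sketch of this recipe follows. It assumes a hypothetical two-point dip filter a(i1, i2) = δ(i1, i2) − ρ δ(i1 − p, i2 − 1) for an integer dip of p samples per trace, with a damping factor ρ < 1 to keep the recursion bounded; this is not the 8-point filter used in the paper. Deconvolution (polynomial division) by this filter is a recursion from trace to trace, and applying the filter to the model returns the original random numbers. The names `plane_wave_model`, `apply_dip_filter`, and `sparse_data` are illustrative.

```python
import numpy as np


def plane_wave_model(n1, n2, p=1, rho=0.99, seed=0):
    """Deconvolve white noise r with the hypothetical dip filter
    a = delta(i1, i2) - rho * delta(i1 - p, i2 - 1), integer dip 0 <= p < n1.
    Polynomial division is a recursion from trace to trace:
        m[i1, i2] = r[i1, i2] + rho * m[i1 - p, i2 - 1]
    """
    if not 0 <= p < n1:
        raise ValueError("this sketch needs an integer dip 0 <= p < n1")
    rng = np.random.default_rng(seed)
    r = rng.standard_normal((n1, n2))
    m = r.copy()
    for i2 in range(1, n2):
        # Add the damped previous trace, shifted down by p samples.
        m[p:, i2] += rho * m[:n1 - p, i2 - 1]
    return m, r


def apply_dip_filter(m, p=1, rho=0.99):
    """Convolve m with the same dip filter; for the model above this returns r."""
    n1 = m.shape[0]
    y = m.copy()
    y[p:, 1:] -= rho * m[:n1 - p, :-1]
    return y


def sparse_data(model, fraction=0.02, seed=1):
    """Keep each model sample independently with probability `fraction`."""
    rng = np.random.default_rng(seed)
    known = rng.random(model.shape) < fraction
    return np.where(known, model, 0.0), known
```

For example, `m, r = plane_wave_model(100, 50, p=1, rho=0.99)` followed by `d, known = sparse_data(m, fraction=0.02)` gives a dipping-event model and a 2% random sampling of it.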

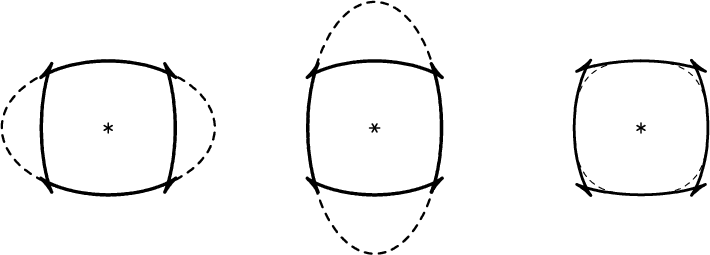

We use the aforementioned dip filter to regularize a least-squares estimation of the missing model points and show that this filter is ideal, in the sense that the model residual is relatively small. Interestingly, we found that the characteristics of the true model and of the interpolation result depended strongly on the accuracy (dip-spectrum localization) of the dip filter. We chose the 8-point truncated sinc filter presented by Fomel (2000) and briefly discuss the motivation for this choice.
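The regularized estimation can be sketched as a standard missing-data problem: keep the known samples fixed and choose the unknown ones to minimize |A m|^2, where A is convolution with the dip filter. The sketch below assumes SciPy and, again, a hypothetical two-point integer-dip filter with ρ = 1 rather than Fomel's 8-point filter. For brevity, the test model is an exact plane wave (one random trace shifted p samples per trace), which this filter annihilates, instead of the deconvolved random field described above. All function names are illustrative.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import lsqr


def exact_plane_wave(n1, n2, p=1, seed=0):
    """Test model m[i1, i2] = s[i1 - p*i2 + p*n2]: one random trace shifted
    by p samples per trace (the offset keeps every index non-negative)."""
    rng = np.random.default_rng(seed)
    s = rng.standard_normal(n1 + p * n2)
    i1, i2 = np.meshgrid(np.arange(n1), np.arange(n2), indexing="ij")
    return s[i1 - p * i2 + p * n2]


def dip_filter_matrix(n1, n2, p=1, rho=1.0):
    """Sparse matrix for internal convolution with the hypothetical filter
    delta(i1, i2) - rho * delta(i1 - p, i2 - 1), acting on m.ravel() (C order).
    Only outputs whose two inputs both lie inside the grid are kept."""
    rows, cols, vals = [], [], []
    eq = 0
    for i1 in range(p, n1):
        for i2 in range(1, n2):
            rows += [eq, eq]
            cols += [i1 * n2 + i2, (i1 - p) * n2 + (i2 - 1)]
            vals += [1.0, -rho]
            eq += 1
    return sp.csr_matrix((vals, (rows, cols)), shape=(eq, n1 * n2))


def interpolate(data, known, p=1, rho=1.0):
    """Honor the known samples and fill the rest by minimizing |A m|^2.
    Writing m = m_known + S x (S scatters the unknowns x into the grid),
    solve A S x ~= -A m_known in the least-squares sense with LSQR."""
    n1, n2 = data.shape
    A = dip_filter_matrix(n1, n2, p, rho).tocsc()
    m = np.where(known, data, 0.0).ravel()
    unknown = np.flatnonzero(~known.ravel())
    if unknown.size == 0:
        return m.reshape(n1, n2)
    AS = A[:, unknown]  # the columns of A that act on unknown samples
    x = lsqr(AS, -(A @ m), atol=1e-12, btol=1e-12,
             iter_lim=10 * unknown.size)[0]
    m[unknown] = x
    return m.reshape(n1, n2)
```

Because this filter only links samples along the dip, each unknown sample is determined by the known samples on its own dip line; unknown samples on a line with no known sample are left at zero, which is the minimum-norm choice LSQR makes when started from zero.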