Australia’s population is smaller than the population of Texas, yet Australia’s surface area is more than ten times larger than Texas. Australia is not only one of the top ten countries by proven natural gas reserves (the full list is Iran, Russia, Qatar, Turkmenistan, USA, Saudi Arabia, Venezuela, Nigeria, Algeria, Australia) but also one of the top ten countries in visits to the Madagascar website (the full list is USA, China, Canada, UK, Brazil, Germany, India, Saudi Arabia, France, Australia).

The Madagascar school in Melbourne, Australia, took place on August 15 and was organized by Jeff Shragge from UWA as a workshop at the 2013 ASEG-PESA meeting. The school attracted nearly 20 participants who listened to presentations and worked through interactive exercises. Jan Stellingwerff from dGB demonstrated the Madagascar interface in OpendTect. The school materials are available now on the website.

Madagascar school in Melbourne

August 25, 2013 Celebration No comments

Cube-helix color palette

August 23, 2013 Examples No comments

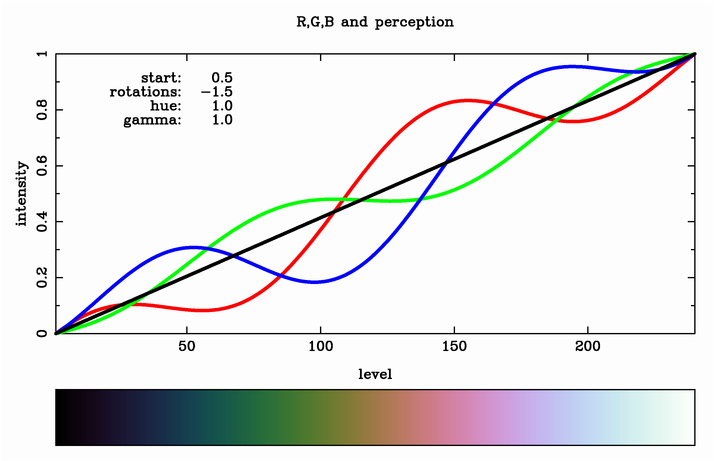

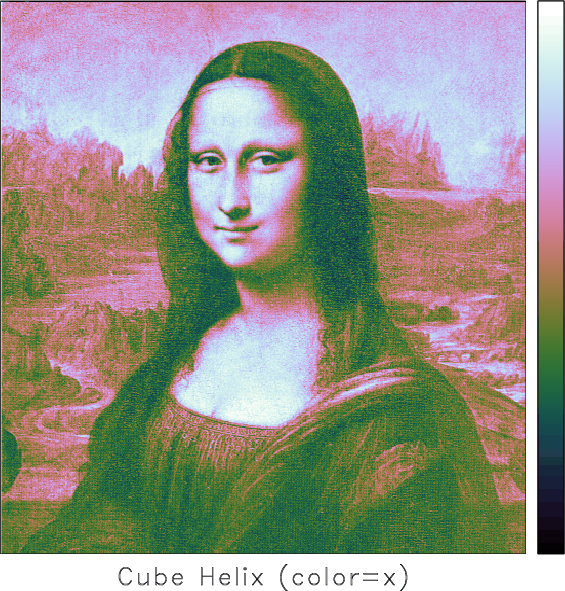

The “cube helix” color palette was suggested by Dave Green for displaying astronomical intensity. This pallette is designed to be monotonically increasing on terms of its perceived brightness, which results in a nice greyscale when printed on a black and white printer.

Now this palette is available to Madagascar plotting programs, such as sfgrey, as color=x. See the following example from rsf/rsf/sfgrey:

More information:

Spectral recomposition

August 9, 2013 Documentation No comments

A new paper is added to the collection of reproducible documents:

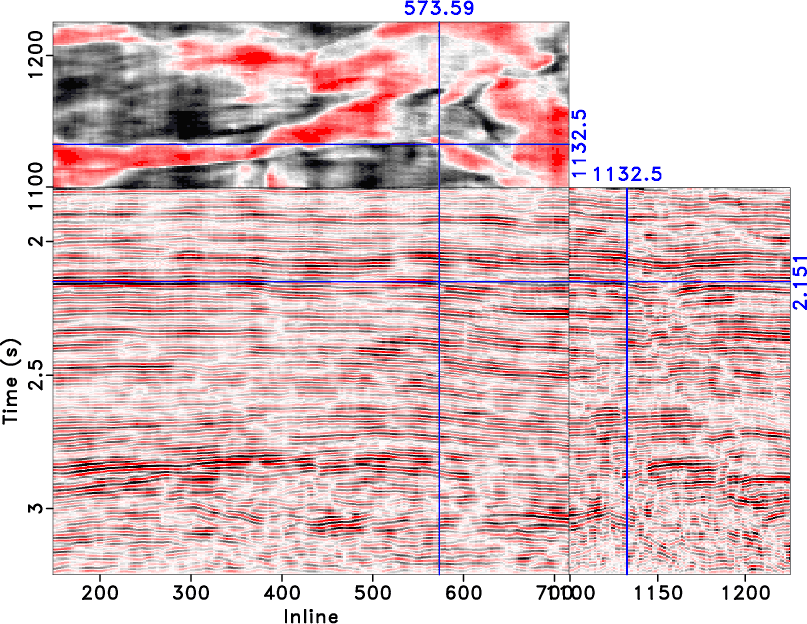

Automated spectral recomposition with application in stratigraphic interpretation

Analyzing seismic attributes in the frequency domain is helpful for reservoir characterization. To analyze the reservoir interval of interest in detail, it is important to capture the seismic response at each frequency subset. Spectral recomposition can be used to extract significant components from the seismic spectrum. We propose a separable nonlinear least-squares algorithm for spectral recomposition, which estimates both linear and nonlinear parts automatically in separate steps. Our approach is applied to estimate fundamental signal parameters, peak frequencies and amplitudes, with which the seismic spectrum can be reconstructed. Automated spectral recomposition helps us visualize frequency-dependent geological features on both cross sections and time slices by extracting significant frequency components. Spectral recomposition can also indicate how frequency contents attenuate with time.

Omnidirectional PWD

August 9, 2013 Documentation No comments

A new paper is added to the collection of reproducible documents:

Omnidirectional plane-wave destruction

Steep structures in seismic data may bring directional aliasing, thus plane-wave destruction (PWD) filter can not obtain an accurate dip estimation. We propose to interpret plane-wave construction (PWC) filter as a line-interpolating operator and introduce a novel circle-interpolating model. The circle-interpolating PWC can avoid phase-wrapping problems, and the related circle-interpolating plane-wave destruction (PWD) can avoid aliasing problems. We design a 2D maxflat fractional delay filter to implement the circle interpolation, and prove that the 2D maxflat filter is separable in each direction. Using the maxflat fractional delay filter in the circle interpolation, we propose the omnidirectional plane-wave destruction (OPWD). The omnidirectional PWD can handle both vertical and horizontal structures. With a synthetic example, we show how to obtain an omnidirectional dip estimation using the proposed omnidirectional PWD. An application of the omnidirectional PWD to a field dataset improves the results of predictive painting and event picking, as compared to conventional PWD.

Reproducible research and IPython notebooks

August 6, 2013 Systems No comments

Reproducible science was one of the main general themes at the recent SciPy conference in Austin. While different tools for accomplishing reproducible research are being proposed, IPython notebooks are often mentioned as one of the main tools. In his review of the meeting, Eric Jones of Enthought writes:

We can safely say that 2013 is the year of the IPython notebook. It was everywhere. I’d guess 80+% of the talks and tutorials for the conference used it in their presentations.

As illustrated by the screenshots below, it is possible to combine SCons-based processing workflows with IPython notebooks.

The notebook used in this example is in rsf/rsf/test/test.ipynb

How far is Madagascar from being a production system?

August 4, 2013 FAQ 6 comments

Madagascar was set up by researchers for researchers, and this gives it some unique qualities. Sometimes user questions on mailing lists and in-person communications to people involved with the project reflect attempts to examine to what extent madagascar can be used for seismic data processing and imaging in a production environment. This post does not attempt to highlight the qualities that Madagascar already has — the wiki and blog are dedicated to that. It is not a feature request list from the main developer(s) either. It is an attempt to clarify the current usability limits of the package when it comes to production. It is also a maximal list — I am not aware of a single production system that has all the features below. So, what is the current delta between Madagascar and an optimal production system? In no particular order:

– Dataset exploration tools. Click on this trace, on that one, plot headers over data in an interactive workflow… be able to understand the data quickly. Be able to try many encodings quickly for headers and for data, like an automated lock picker. Ancient packages who have accrued decades of lessons of encountering messed-up SEG-Y have a strong advantage here. Also, may need to handle SEG-D and other arcane formats.

– Geometry. Original data is in a global x-y coordinate system (usually UTM, but there are others too). Most work is done on the inline-crossline grid. Migration occurs on a physical units grid parallel to the inline-crossline grid. Various steps may employ different such local coordinate systems. Each of these can be left-handed, with negative increments, etc. The project history needs to know at every moment what coordinate system the data is in (with a pointer to its unambiguous definition) and to transform between all of them easily (including the rotations).

– Autopicking, manual picking and interactive pick editing tools.

– Dealing with borehole logs, horizons, and other such “foreign objects”

– Centralized job submission and logging — the system remembers the script for each job (including what internal or external client it has been run for — for billing purposes). In madagascar the history file helps somewhat, but sometimes it is difficult to reconstitute the flow because a job may involve more than a file, and not all parameters are written to the history file. Having a centralized job database, and writing to header which job created the file, is much more effective.

– Separation of I/O and algorithms. The algorithms and the I/O are mixed in m8r programs. Instead there should be procedures (subroutines/functions) that are independent from the I/O form used, with drivers that can do I/O. This prevents reusing the same code between programs, and also prevents the reuse of subroutines in flow-based processing (allocating once, then calling procedures that act on the same ensemble of data). This applies even to the humble Mclip.c on the wiki. The “business logic” should be incapsulated into one or more libraries per user, and these should be installed in $RSFROOT/lib, so they can be called from other user directories without code duplication or symlinks.

– Parameter registration, so each program can be interrogated: “what types of files do you need for input, how many dimensions, etc”, “what are reasonable parameter ranges”, etc, and incompatibilities can be automatically spotted at the start-up check, instead of a week into the workflow.

– Large-scale distributed: (1) data re-sorting based on headers, and (2) multidimensional transpose. Sorting and transposing data to create the input for some types of migration may currently take much more than the migration itself

– Some common processing tools (f-x-y decon, etc)

– Widespread openmp parallelization of programs. Having procedure in place that ensure thread safety, and consistent conventions about the level at which shared memory parallelization happens.

– A generic parallelization framework with production features (ability to launch in small batches, re-send failed jobs to other nodes, smart distributed collect with checkpointing, adding and subtracting nodes during the job, preparing HTML or PNG reports as to the state of the job upon a query, etc). A full list of features for this item can be quite long.

– Package-wide conventions, i.e. let’s use only full offsets in all CLI parameters for all programs, no half offsets.

– Hooks for interfacing with a queuing system (OpenPBS/etc). Users should not have the permissions to ssh/rsh directly into the nodes of a properly set up cluster.

– A GUI for setting up flows so CLI-challenged people can still be productive, and newbies can discover system features easily. Of course, the system should still be fully scriptable. Like any software, this GUI needs to be used by a number of people large enough so that bugs are ironed out, otherwise it will just be bypassed because of buginess.

– Visualization with zoom and interactive annotations in 2-D, interactive visualization of 3-D data volumes (regularly and irregularly sampled). Or, even better, the ability to output in a format that can use open source third party visualization, using geophysical or medical imaging visualization packages.

I am sure there are many other features that can be added to this list. This is just my brain dump at this moment.

Again — the above enumeration was neither a criticism of madagascar, nor a request for work in these directions (as the needed quantity of work for some of them may be enormous). These are not flaws — m8r is an excellent research, learning and technology platform.

The reasons why established companies have not contributed such features to madagascar (or other open-source projects with a living community) is of course that they already had somewhat satisfactory software solutions for them, otherwise they couldn’t have been in business. Then, the only hope was from startups. Startups however, even when they do not explicitly sell their code, they think of being able to sell the company to someone who might want to do that, and might view non-GPL software as a more valuable asset. So no wonder there have been so few contributions in the above directions. The correct solution to this quandary is companies recognizing that, like the operating system, none of this infrastructure provides a competitive advantage (algorithm kernels do), and thus sharing costs with other companies is the logical thing. The disadvantage of reducing the entry barrier to newcomers is balanced by the improved quality of the platform through collaboration and a larger user base, having new hires already proficient with it (both as users and as developers), and saving money that can be directed to core business.

Results may vary

August 3, 2013 Links No comments

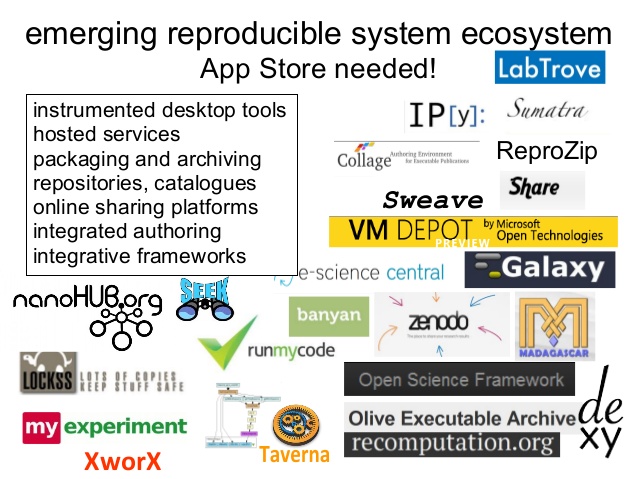

Slide about the emerging reproducible research ecosystem from Carole Goble’s keynote presentation at a computational biology conference last month. The presentation summarizes the issues involved in reproducible research and the recent progress.

ISMB/ECCB 2013 Keynote Goble Results may vary: what is reproducible? why do open science and who gets the credit? from Carole Goble

See also a presentation sketch by Jenny Chen.

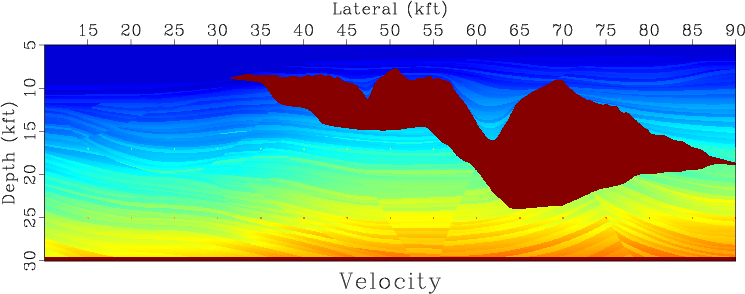

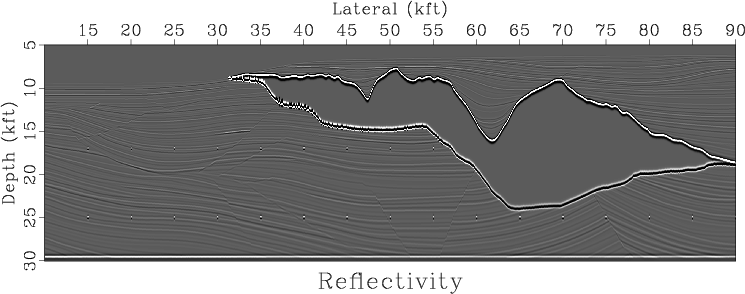

Program of the month: sfai2refl

August 2, 2013 Programs No comments

sfai2refl converts acoustic impedance to normal-incidence PP reflectivity using the simple equation

$$R(t) = \frac{I(t+\Delta t) – I(t)}{I(t+\Delta t) + I(t)}$$

The program is useful for convolution modeling. The following example from rsf/rsf/wedge shows a classic example of wedge modeling, which is used for analyzing the tuning phenomenon (Kallweit, R. and L. Wood, 1982, The limits of resolution of zero-phase wavelets: Geophysics, v. 47, 1035-1046.)

A more sophisticated example from geo384w/hw5/sigsbee2 computes an effective reflectivity of the Sigsbee model:

The program has no parameters.