A new paper is added to the collection of reproducible documents: Application of principal component analysis in weighted stacking of seismic data

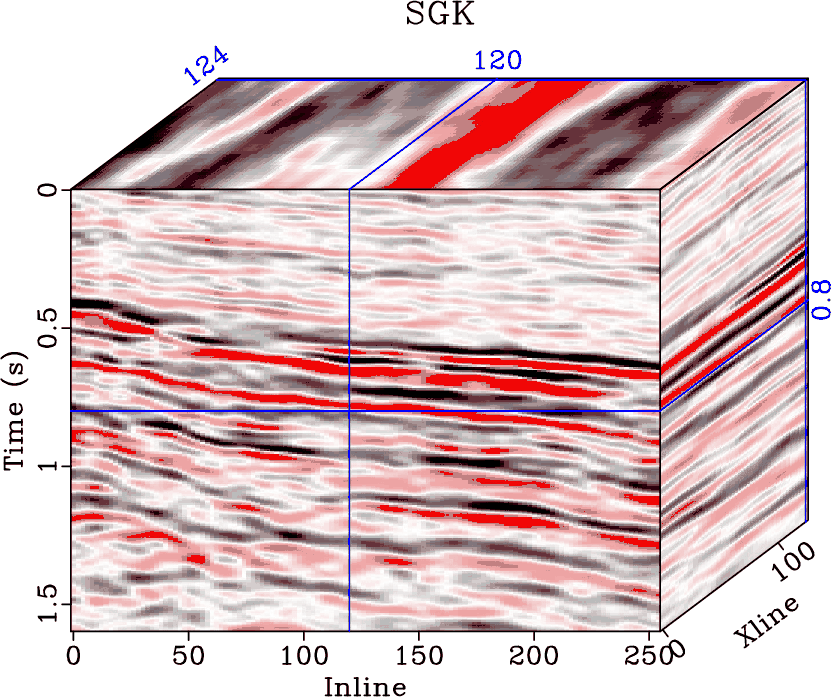

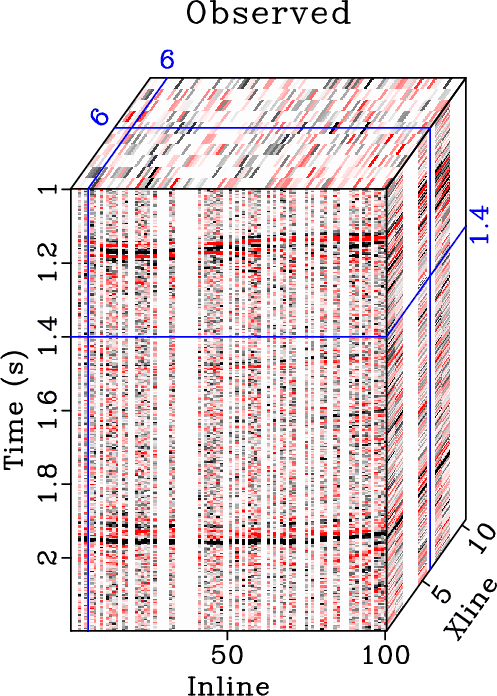

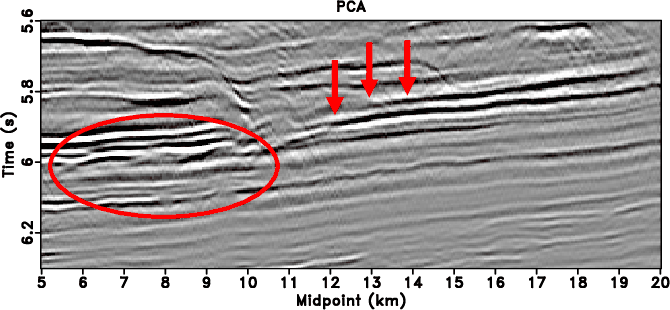

Optimal stacking of multiple datasets plays a significant role in many scientific domains. The quality of stacking affects the signal-to-noise ratio (SNR) and amplitude fidelity of the stacked image. In seismic data processing, similarity-weighted stacking uses the local similarity between each trace and a reference trace as the weight for stacking the flattened prestack seismic data after normal moveout (NMO) correction. The traditional reference trace is an approximate zero-offset trace, calculated as a direct arithmetic mean of the data matrix along the spatial direction. However, when the data matrix contains abnormal misaligned traces or erratic and non-Gaussian random noise, the accuracy of the approximate zero-offset trace is greatly reduced, which in turn degrades the quality of stacking. We propose a novel weighted stacking method based on principal component analysis (PCA). The principal components of the data matrix, namely the useful signals, are extracted by a low-rank decomposition method that solves an optimization problem with a low-rank constraint. The optimization problem is solved via a common singular value decomposition algorithm. The low-rank decomposition of the data matrix alleviates the influence of abnormal traces and of erratic and non-Gaussian random noise, and is therefore more robust than the traditional alternatives. We use both synthetic and field data examples to show the successful performance of the proposed approach.
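The idea in the abstract can be illustrated with a minimal NumPy sketch. It is not the paper's implementation, and it rests on several assumptions: the low-rank step is a plain truncated SVD with a hypothetical `rank` parameter, the reference trace is the spatial mean of the low-rank matrix, and the time-varying local similarity is replaced by a much simpler single normalized correlation per trace. All function names are made up for illustration.

```python
import numpy as np

def low_rank_approx(data, rank=1):
    """Best rank-`rank` approximation (Frobenius norm) via truncated SVD."""
    u, s, vt = np.linalg.svd(data, full_matrices=False)
    return (u[:, :rank] * s[:rank]) @ vt[:rank, :]

def correlation_weights(data, reference):
    """One weight per trace: normalized zero-lag correlation with the
    reference, clipped at zero. A simplified stand-in for local similarity."""
    num = data.T @ reference
    den = np.linalg.norm(data, axis=0) * np.linalg.norm(reference) + 1e-12
    return np.clip(num / den, 0.0, None)

def weighted_stack(data, reference):
    """Weighted average of the traces (columns) of an NMO-corrected gather."""
    w = correlation_weights(data, reference)
    return (data @ w) / (w.sum() + 1e-12)

def pca_weighted_stack(data, rank=1):
    """Build the reference from the low-rank part of the data, then stack."""
    reference = low_rank_approx(data, rank).mean(axis=1)
    return weighted_stack(data, reference)

# Synthetic flattened gather: rows are time samples, columns are traces.
rng = np.random.default_rng(0)
nt, nx = 200, 40
t = np.arange(nt)
signal = np.exp(-0.5 * ((t - 100) / 5.0) ** 2)   # flat event
data = np.tile(signal[:, None], (1, nx)) + 0.1 * rng.standard_normal((nt, nx))
data[:, 5] = 5.0 * np.roll(signal, 30)           # one strong misaligned trace

stack_mean = data.mean(axis=1)        # conventional arithmetic-mean stack
stack_pca = pca_weighted_stack(data)  # low-rank reference, weighted stack

print("mean stack error:", np.linalg.norm(stack_mean - signal))
print("PCA  stack error:", np.linalg.norm(stack_pca - signal))
```

In this toy gather the misaligned trace leaks into the arithmetic mean, whereas the rank-1 reference is dominated by the coherent event, so the outlier receives a weight close to zero. Real data would call for a proper local similarity measure and a rank chosen to match the data.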