|

|

|

|

CuQ-RTM: A CUDA-based code package for stable and efficient Q-compensated reverse time migration |

Next: Summary Up: Wang et al, 2019: Previous: -RTM on field data

An open-source code package cu![]() -RTM presented in this chapter is designed for efficient and stable viscoacoustic imaging in attenuating media. The package utilizes streamed CUFFT, CATRC scheme and adaptive stabilization to pertinently tackle some well known problems in viscoacoustic imaging such as intensive computations, large storage requirements and frequent disk I/O, and instability. Each of these issues has been discussed in the literature, notably an efficient implementation of 3D FFTs across multiple-GPU systems (Czechowski et al., 2012; Nandapalan et al., 2012), memory-saving reconstruction schemes (Anderson et al., 2012; Wang et al., 2017b; Yang et al., 2016), and stabilized compensation strategies (Sun and Zhu, 2015; Zhu et al., 2014; Wang et al., 2017a). The proposed package aims at utilizing a set of the state-of-art strategies to achieve an efficient, storage-saving and stable

-RTM presented in this chapter is designed for efficient and stable viscoacoustic imaging in attenuating media. The package utilizes streamed CUFFT, CATRC scheme and adaptive stabilization to pertinently tackle some well known problems in viscoacoustic imaging such as intensive computations, large storage requirements and frequent disk I/O, and instability. Each of these issues has been discussed in the literature, notably an efficient implementation of 3D FFTs across multiple-GPU systems (Czechowski et al., 2012; Nandapalan et al., 2012), memory-saving reconstruction schemes (Anderson et al., 2012; Wang et al., 2017b; Yang et al., 2016), and stabilized compensation strategies (Sun and Zhu, 2015; Zhu et al., 2014; Wang et al., 2017a). The proposed package aims at utilizing a set of the state-of-art strategies to achieve an efficient, storage-saving and stable ![]() -RTM. Here we discuss the superior performance of the CATRC scheme and adaptive stabilization over conventional methods. Unlike conventional effective boundary-saving strategy using finite-differences (FD) (

-RTM. Here we discuss the superior performance of the CATRC scheme and adaptive stabilization over conventional methods. Unlike conventional effective boundary-saving strategy using finite-differences (FD) (![]() grid points FD stencil), which requires

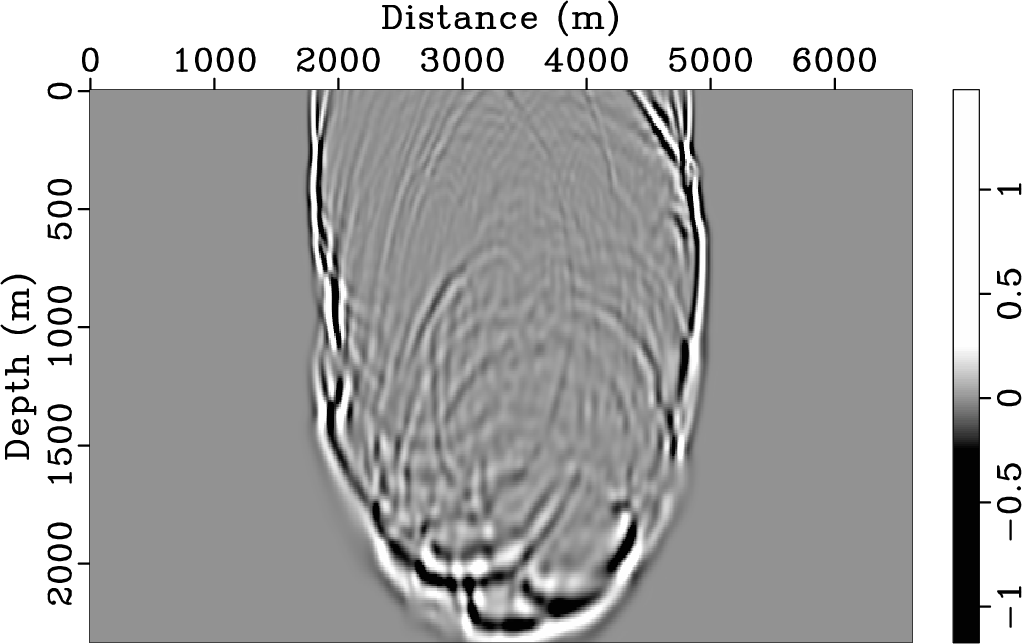

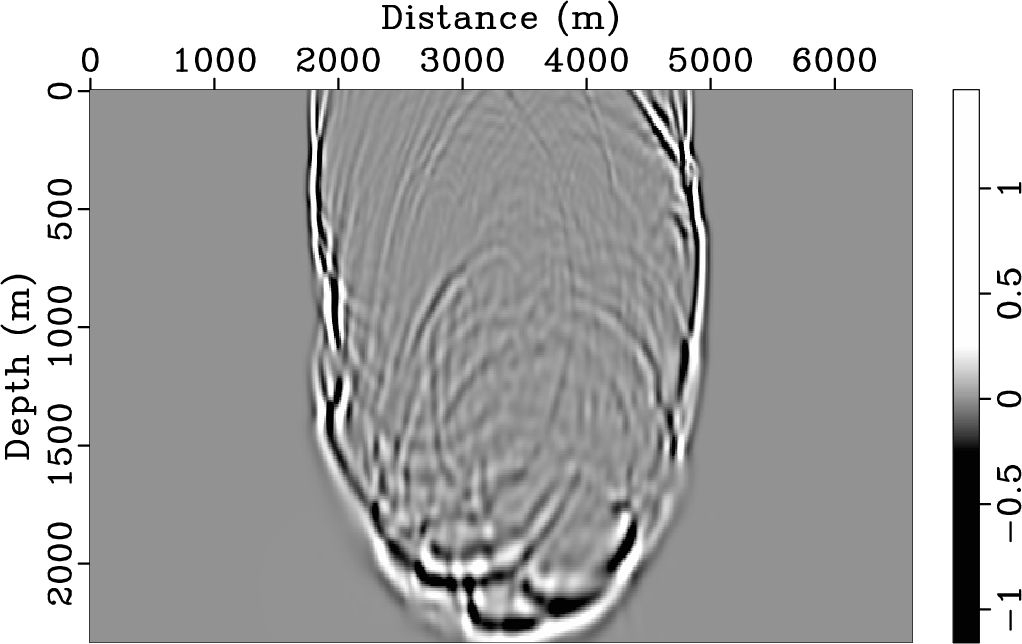

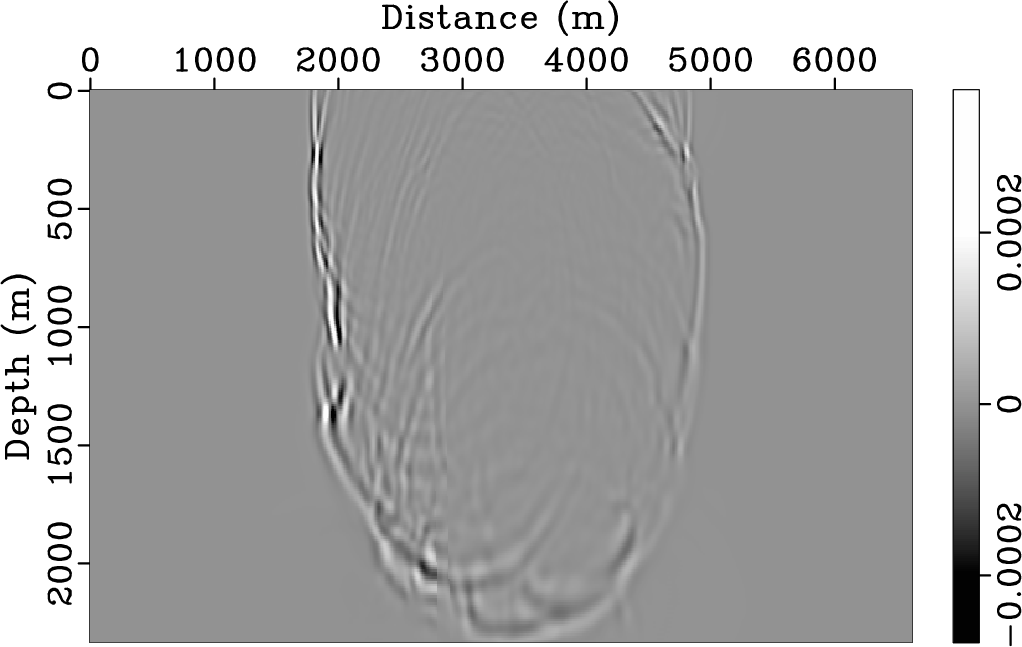

grid points FD stencil), which requires ![]() layers of boundary wavefields at each time step, the proposed CATRC scheme stores the outermost layers of boundary wavefields at each time step plus states at the checkpoints and the last two time steps to the reconstructed source wavefield for performing crosscorrelating imaging condition, without much loss of precision. Figure 12 shows source snapshots, reconstructed snapshots and their differences from the Marmousi model at two propagation times. It demonstrates that the reconstructed wavefields from CATRC are accurate enough for

layers of boundary wavefields at each time step, the proposed CATRC scheme stores the outermost layers of boundary wavefields at each time step plus states at the checkpoints and the last two time steps to the reconstructed source wavefield for performing crosscorrelating imaging condition, without much loss of precision. Figure 12 shows source snapshots, reconstructed snapshots and their differences from the Marmousi model at two propagation times. It demonstrates that the reconstructed wavefields from CATRC are accurate enough for ![]() -RTM.

-RTM.

|

|---|

|

Fig12a_v,Fig12b_v,Fig12c_v,Fig12d_v,Fig12e_v,Fig12f_v

Figure 12. Forward snapshots, reconstructed snapshots and their differences from Marmousi model (see Figure 5) at two propagation times: (a)-(c) |

|

|

As this chapter mainly focuses on ![]() -RTM and its GPU implementation, we do not pay much attention to the numerical simulation of spatially varying fractional power of Laplacian operators. There are effective proposed schemes to handle spatial variable-order fractional Laplacians (Wang et al., 2018c; Sun et al., 2014; Chen et al., 2016; Li et al., 2016; Wang et al., 2018a). Chen et al. (2016) proposed two efficient methods to calculate spatial variable-order fractional Laplacians, i.e., Taylor-expansion approximation scheme and Low-rank decomposition scheme. Wang et al. (2018a) extended the Taylor-expansion approximation scheme to the viscoelastic case. All of these methods come at the expense of computational efficiency. In this code package, the averaged strategy (Zhu et al., 2014) is used to achieve a quick solution. Improving the accuracy of the code package will be my future work.

-RTM and its GPU implementation, we do not pay much attention to the numerical simulation of spatially varying fractional power of Laplacian operators. There are effective proposed schemes to handle spatial variable-order fractional Laplacians (Wang et al., 2018c; Sun et al., 2014; Chen et al., 2016; Li et al., 2016; Wang et al., 2018a). Chen et al. (2016) proposed two efficient methods to calculate spatial variable-order fractional Laplacians, i.e., Taylor-expansion approximation scheme and Low-rank decomposition scheme. Wang et al. (2018a) extended the Taylor-expansion approximation scheme to the viscoelastic case. All of these methods come at the expense of computational efficiency. In this code package, the averaged strategy (Zhu et al., 2014) is used to achieve a quick solution. Improving the accuracy of the code package will be my future work.

Another critical issue is the constructed architecture and parallelism strategy of the cu![]() -RTM code package. The architecture of the cu

-RTM code package. The architecture of the cu![]() -RTM code package has been discussed in detail, which can be separated into four components: memory manipulation, kernel, module and multi-level parallelism. Task-oriented kernels form several fully functional modules, and these modules are further integrated into a complete process of

-RTM code package has been discussed in detail, which can be separated into four components: memory manipulation, kernel, module and multi-level parallelism. Task-oriented kernels form several fully functional modules, and these modules are further integrated into a complete process of ![]() -RTM. The package contains 2D seismic imaging schemes on both compensated and non-compensated frames with adaptive stabilization and low-pass filtering strategies. We can execute

-RTM. The package contains 2D seismic imaging schemes on both compensated and non-compensated frames with adaptive stabilization and low-pass filtering strategies. We can execute ![]() -RTM in a flexible manner by choosing a series of flags responsible for switching among different scenarios without any code modifications. In this sense, cu

-RTM in a flexible manner by choosing a series of flags responsible for switching among different scenarios without any code modifications. In this sense, cu![]() -RTM provides a general GPU-based framework to ensure a time- and memory-efficient implementation. The proposed cu

-RTM provides a general GPU-based framework to ensure a time- and memory-efficient implementation. The proposed cu![]() -RTM is implemented in an MLP manner to take advantages of all the CPUs and GPUs available, while maintaining impressively good stability and flexibility. Whether for a single shot test or a complete simulation, with only a single machine containing seven Nvidia GPU cards, cu

-RTM is implemented in an MLP manner to take advantages of all the CPUs and GPUs available, while maintaining impressively good stability and flexibility. Whether for a single shot test or a complete simulation, with only a single machine containing seven Nvidia GPU cards, cu![]() -RTM consistently provides speedup factors approaching or exceeding 50 times compared to conventional CPU-only implementations. My package is particularly well suited to

-RTM consistently provides speedup factors approaching or exceeding 50 times compared to conventional CPU-only implementations. My package is particularly well suited to ![]() -RTM where multiple shots are run on clusters with multiple GPUs per node.

-RTM where multiple shots are run on clusters with multiple GPUs per node.

Shared memory is expected to be much faster than global memory, which enables direct GPU-to-GPU transfers (Jaros et al., 2012; Nandapalan et al., 2012). Many researchers proposed shared memory strategy for GPU parallel computing to improve computational efficiency (Liu et al., 2012; Nandapalan et al., 2012; Micikevicius, 2009; Jaros et al., 2012; Liu et al., 2013). In the cu![]() -RTM code package, we adopt streamed CUFFT to improve computational efficiency with no domain decomposition and GPU-to-GPU transfers involved. For this reason we do not take shared memory strategy into considersation, which might be considered as an improvment in a future version.

-RTM code package, we adopt streamed CUFFT to improve computational efficiency with no domain decomposition and GPU-to-GPU transfers involved. For this reason we do not take shared memory strategy into considersation, which might be considered as an improvment in a future version.

Apart from outlining the architecture of the cu![]() -RTM code package and underlining some program optimization schemes, we also provide speedup analysis and strong scaling test on synthetic models. With only a single Nvidia GPU card, the presented cu

-RTM code package and underlining some program optimization schemes, we also provide speedup analysis and strong scaling test on synthetic models. With only a single Nvidia GPU card, the presented cu![]() -RTM code package can be 50-80 times faster than the state-of-art distributed CPU implementation running on a single CPU core. We also find that GPU-based simulation on larger model scale tends to reach higher speedup ratio compared with that of small-scale simulation. Objectively speaking, an abusolute speedup ratio without considering the hardware that we used is not really a “fair” comparison. In this study, we test the package on a GeForce GTX760 GPU and comapre it with the conventional CPU implementation running on a single core of Intel Core i5-4460 CPU. If a more modern CPU system or a better GPU card is used, the speedup ratio would be much lower or higher than that we claimed in this study. Regarding the scaling test on multi-GPUs, the provided code package exhibits excellent scalability and can be run with almost ideal code performance in part because communications are almost entirely overlapped with calculations. My package's architecture is designed to mimic how a geophysicist writes down a seismic processing module such as modeling, imaging, and inversion. By utilizing the streamed CUFFT, the most time-consuming part of the pseudo-spectral simulation can be computed synchronously on each device, which improves performance and lends itself naturally to the future implementation of more complicated (

-RTM code package can be 50-80 times faster than the state-of-art distributed CPU implementation running on a single CPU core. We also find that GPU-based simulation on larger model scale tends to reach higher speedup ratio compared with that of small-scale simulation. Objectively speaking, an abusolute speedup ratio without considering the hardware that we used is not really a “fair” comparison. In this study, we test the package on a GeForce GTX760 GPU and comapre it with the conventional CPU implementation running on a single core of Intel Core i5-4460 CPU. If a more modern CPU system or a better GPU card is used, the speedup ratio would be much lower or higher than that we claimed in this study. Regarding the scaling test on multi-GPUs, the provided code package exhibits excellent scalability and can be run with almost ideal code performance in part because communications are almost entirely overlapped with calculations. My package's architecture is designed to mimic how a geophysicist writes down a seismic processing module such as modeling, imaging, and inversion. By utilizing the streamed CUFFT, the most time-consuming part of the pseudo-spectral simulation can be computed synchronously on each device, which improves performance and lends itself naturally to the future implementation of more complicated (![]() -compensated) LSRTM and FWI (Jaros et al., 2016a; Yang et al., 2015) on the GPU. Future work may also generalize to the 3D case and incorporate more efficient reconstruction scheme, while a further investigation of alternative or improved 2D and 3D FFTs techniques across multiple GPUs (Jaros et al., 2016b,2012) may also prove worthwhile.

-compensated) LSRTM and FWI (Jaros et al., 2016a; Yang et al., 2015) on the GPU. Future work may also generalize to the 3D case and incorporate more efficient reconstruction scheme, while a further investigation of alternative or improved 2D and 3D FFTs techniques across multiple GPUs (Jaros et al., 2016b,2012) may also prove worthwhile.